HAProxy is an open‑source, high‑performance load balancer and reverse proxy. Running on a Linux server, it distributes traffic across multiple backend servers for reliability, scalability, and security.

It supports Layer 4 (TCP) and Layer 7 (HTTP/HTTPS), health checks, SSL termination, sticky sessions, and observability, making it a production‑grade choice for web apps, APIs, and microservices.

In this beginner‑friendly guide, you’ll understand HAProxy on Linux servers from the ground up: what it does, how it works, how to install it on Ubuntu/Debian and RHEL‑based distros, and how to configure it for real‑world workloads. We’ll also cover best practices, troubleshooting, and when to choose HAProxy over alternatives.

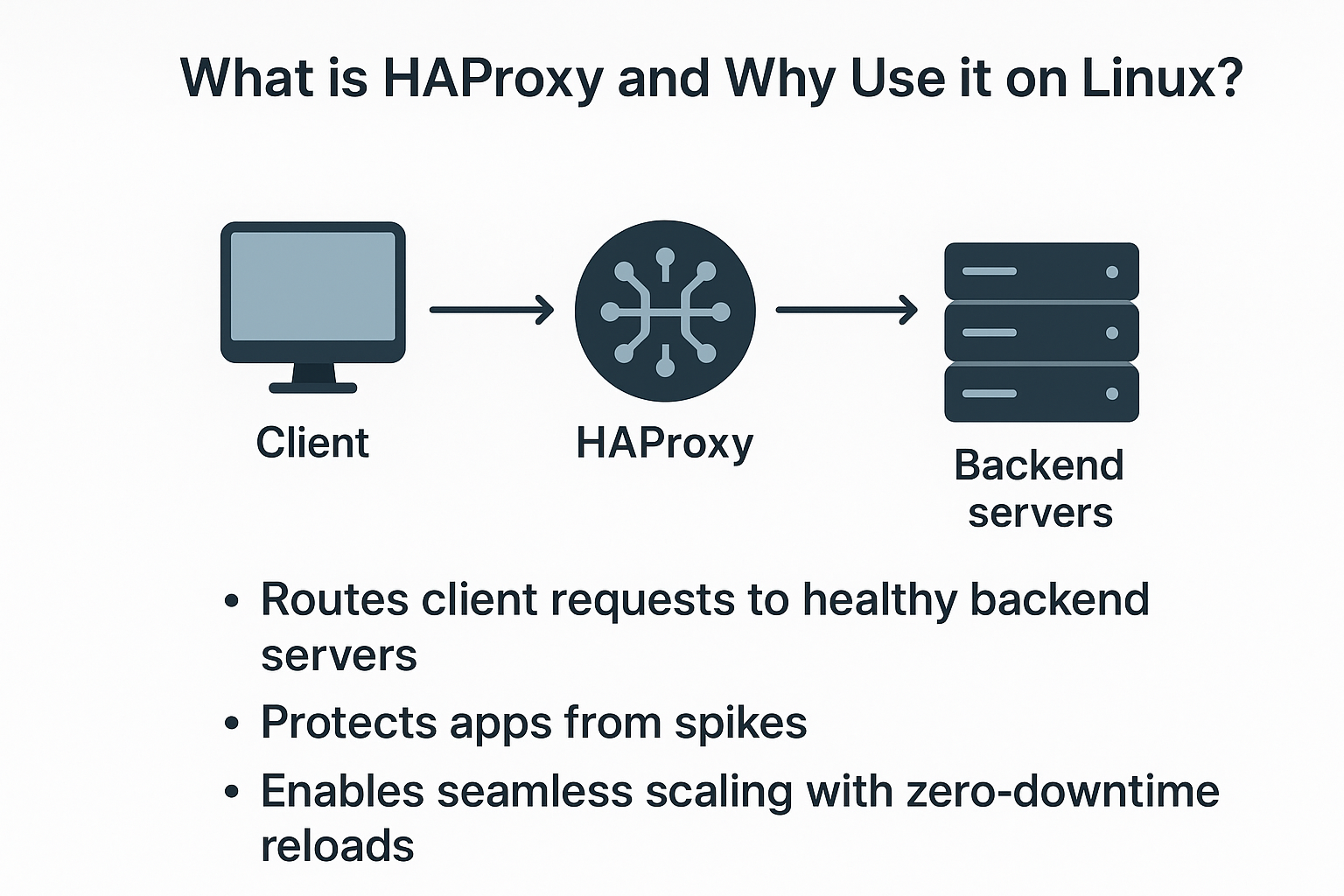

What is HAProxy and Why Use it on Linux?

HAProxy (High Availability Proxy) is a battle‑tested, open‑source load balancer and reverse proxy. On Linux, it’s prized for stability, low latency, and throughput.

It routes client requests to healthy backend servers, protects apps from spikes, and enables seamless scaling with zero‑downtime reloads.

Core capabilities you’ll use

- Layer 4 and Layer 7 load balancing (TCP, HTTP/HTTPS).

- Flexible algorithms: roundrobin, leastconn, source (sticky), and more.

- Health checks, circuit breaking, and per‑server weights.

- SSL/TLS termination, HTTP/2, HSTS, and SNI routing.

- ACLs and routing logic (path, host, headers, Geo/IP).

- Sticky sessions via cookies or source IP.

- Logging, stats page, and Prometheus‑friendly exporters.

- High availability with VRRP (keepalived) and stateful stick‑tables.

How HAProxy works (frontends, backends, ACLs)

HAProxy listens for client connections on frontends (e.g., ports 80/443) and forwards requests to backends (your app servers). ACLs (Access Control Lists) let you route by hostname, URL path, methods, or headers—for example, send /api to one pool and /images to another. Health checks continually verify which backends are safe to receive traffic.
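As a minimal sketch of the path-based routing described above, the fragment below sends /api and /images to separate pools and everything else to a default pool. The backend names (be_api, be_images, be_app), the IP addresses, and the /health check path are assumptions for illustration, not values your setup must use.

```haproxy
frontend fe_http
    mode http
    bind *:80

    # Match on the start of the URL path
    acl is_api    path_beg /api
    acl is_images path_beg /images

    use_backend be_api    if is_api
    use_backend be_images if is_images
    default_backend be_app

backend be_api
    mode http
    # Only servers that answer the health check receive traffic
    option httpchk GET /health
    server api1 10.0.0.21:8080 check

backend be_images
    mode http
    server img1 10.0.0.31:8080 check

backend be_app
    mode http
    server app1 10.0.0.11:8080 check
```

Note that path_beg is a plain prefix match, so /api would also match a path such as /apiary. If that matters, match on /api/ instead.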

Install HAProxy on Linux (Ubuntu/Debian, RHEL/CentOS/Rocky/Alma)

Ubuntu/Debian

sudo apt update

sudo apt install -y haproxy

haproxy -v

sudo systemctl enable --now haproxy

sudo systemctl status haproxy --no-pager

RHEL/CentOS/AlmaLinux/Rocky Linux

sudo dnf install -y haproxy # or: sudo yum install -y haproxy

haproxy -v

sudo systemctl enable --now haproxy

sudo systemctl status haproxy --no-pager

Most distros ship a stable HAProxy. For the latest features (HTTP/3, newer TLS, advanced stick‑tables), consider official repositories or building from source per the HAProxy documentation.

Basic HAProxy Configuration (Step‑by‑Step)

The main configuration file is usually at /etc/haproxy/haproxy.cfg. Below is a production‑ready starting point that supports HTTP to HTTPS redirect, HTTPS termination, health checks, sticky sessions, and a password‑protected stats page.

global

log /dev/log local0

log /dev/log local1 notice

chroot /var/lib/haproxy

user haproxy

group haproxy

daemon

maxconn 4000

tune.ssl.default-dh-param 2048

defaults

log global

mode http

option httplog

option dontlognull

timeout connect 5s

timeout client 30s

timeout server 30s

retries 3

option http-keep-alive

# HTTP frontend: redirect to HTTPS

frontend fe_http

bind *:80

http-request redirect scheme https code 301

# HTTPS frontend with TLS termination and HSTS

frontend fe_https

bind *:443 ssl crt /etc/haproxy/certs/example.pem alpn h2,http/1.1

http-response set-header Strict-Transport-Security "max-age=31536000; includeSubDomains; preload"

default_backend be_app

# Backend with health checks and sticky sessions

backend be_app

balance roundrobin

option httpchk GET /health

http-check expect status 200

cookie SRV insert indirect nocache

server app1 10.0.0.11:8080 check cookie s1

server app2 10.0.0.12:8080 check cookie s2

# Stats dashboard

listen stats

bind :8404

stats enable

stats uri /stats

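stats auth admin:StrongPassword!

Replace example.pem with a single PEM file that contains your certificate, any intermediate chain, and the private key. If you’re using Let’s Encrypt with Certbot, the certificate chain (fullchain.pem) and the key (privkey.pem) are issued as separate files, so concatenate them into one file for HAProxy.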

Key directives explained

- global: process‑wide settings (logging, user, maxconn).

- defaults: base settings inherited by frontends/backends (mode, timeouts).

- frontend: binds to a client‑facing address/port; applies ACLs and routing decisions.

- backend: a pool of servers with a load‑balancing algorithm.

- listen: combined frontend+backend section (commonly used for stats or TCP services).

- ACLs: rules for routing/denials based on path, host, headers, methods, IPs, and more.

Common Use Cases for HAProxy on Linux

HTTP load balancing strategies

- roundrobin: even distribution; good default.

- leastconn: favors backend with the fewest active connections; good for slow or stateful apps.

- source: hashes client IP to keep them on the same server (simple stickiness when cookies aren’t possible).
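To make the difference concrete, here is a hedged sketch of two backends that use the non-default algorithms. The backend names (be_slow, be_pinned) are hypothetical, and the server addresses are examples. The hash-type consistent line is an optional addition that reduces how many clients get remapped when a server is added or removed.

```haproxy
# Hypothetical pool for long-lived or slow requests
backend be_slow
    mode http
    balance leastconn
    server app1 10.0.0.11:8080 check
    server app2 10.0.0.12:8080 check

# Hypothetical pool that pins each client IP to one server
backend be_pinned
    mode http
    balance source
    hash-type consistent
    server app1 10.0.0.11:8080 check
    server app2 10.0.0.12:8080 check
```

Keep in mind that balance source can distribute traffic unevenly when many clients share one public IP, for example behind a corporate NAT.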

SSL termination and redirects

Terminate TLS at HAProxy to offload crypto from your app servers and manage certificates centrally. Redirect all HTTP to HTTPS for security and SEO. You can also pass TLS through if you need end‑to‑end encryption—HAProxy supports both modes.
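For the pass-through mode mentioned above, a minimal sketch could look like the following. It assumes the backends listen on port 443 and hold their own certificates; the section names and addresses are hypothetical.

```haproxy
frontend fe_tls_passthrough
    mode tcp
    bind *:443
    option tcplog
    default_backend be_tls_passthrough

backend be_tls_passthrough
    mode tcp
    balance roundrobin
    # No "ssl" keyword here: bytes are relayed untouched, backends hold the certs
    server app1 10.0.0.11:443 check
    server app2 10.0.0.12:443 check
```

Because HAProxy never decrypts the traffic in this mode, HTTP-level features such as path ACLs, header rewriting, and cookie-based stickiness are not available.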

Reverse proxy for microservices and WordPress

Use ACLs to route api.example.com to an API pool and www.example.com to a WordPress pool. For WordPress, enable sticky sessions only if plugins require them; otherwise, keep sessions stateless and share media via a CDN or NFS/object storage.
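A rough sketch of this host-based split is shown below. The backend names (be_api, be_wordpress) and server addresses are assumptions, and the certificate file is assumed to cover both hostnames.

```haproxy
frontend fe_https
    bind *:443 ssl crt /etc/haproxy/certs/example.pem alpn h2,http/1.1

    # Compare the Host header case-insensitively
    acl host_api hdr(host) -i api.example.com
    acl host_www hdr(host) -i www.example.com

    use_backend be_api       if host_api
    use_backend be_wordpress if host_www
    default_backend be_wordpress

backend be_api
    balance roundrobin
    server api1 10.0.0.21:8080 check

backend be_wordpress
    balance roundrobin
    server wp1 10.0.0.41:8080 check
    server wp2 10.0.0.42:8080 check
```

No cookie directive is set on be_wordpress, which keeps the pool stateless as recommended above. Add one only if a plugin needs it.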

Security, Observability, and High Availability

Security hardening

- Run as the haproxy user, chroot to /var/lib/haproxy, and keep file permissions tight.

- Use strong TLS (disable TLS 1.0/1.1), set secure ciphers, enable HSTS, and keep OpenSSL updated.

- Hide version strings; never expose the stats page publicly without auth and IP allowlists.

- Rate‑limit abusive IPs with stick‑tables and ACLs.

- Restrict management ports with firewalls and security groups.
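Several of the points above map to a few configuration lines. The sketch below is one possible way to express them, not a complete hardening policy: the cipher list is only an example to adapt, and the 10.0.0.0/24 admin network is a hypothetical value.

```haproxy
global
    # Refuse TLS 1.0 and 1.1 on every "bind ... ssl" line
    ssl-default-bind-options ssl-min-ver TLSv1.2 no-tls-tickets
    # Example TLS 1.2 cipher list; adjust to your own policy
    ssl-default-bind-ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384

listen stats
    bind :8404
    stats enable
    stats uri /stats
    # Do not show the HAProxy version on the stats page
    stats hide-version
    stats auth admin:StrongPassword!
    # Hypothetical admin network; everything else is denied
    acl from_admin_net src 10.0.0.0/24
    http-request deny unless from_admin_net
```

The allowlist in the stats section complements a firewall rule rather than replacing it.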

Example: simple rate limiting with stick‑tables

frontend fe_https

bind *:443 ssl crt /etc/haproxy/certs/example.pem

# Track connection rate per IP

stick-table type ip size 100k expire 10m store conn_rate(10s)

http-request track-sc0 src

acl too_fast sc0_conn_rate gt 100

http-request deny if too_fast

default_backend be_app

Logging and monitoring

- Enable HTTP logs and ship to rsyslog or journald. Analyze with ELK/Graylog.

- Use the stats page on port 8404 (password‑protected) for real‑time metrics.

- Export metrics via HAProxy’s Prometheus exporter or parse logs for alerts.

- Trace errors with journalctl -u haproxy -f and the haproxy -c config check.
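If your HAProxy build includes the built-in Prometheus exporter (haproxy -vv lists it among the available services when it is compiled in), a small dedicated frontend can expose metrics. This is a sketch under that assumption; the frontend name and port 8405 are hypothetical.

```haproxy
frontend fe_metrics
    mode http
    bind :8405
    # Serve Prometheus metrics at /metrics, if the exporter is compiled in
    http-request use-service prometheus-exporter if { path /metrics }
    # Skip access logs for scrape requests
    no log
```

As with the stats page, restrict this port to your monitoring hosts.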

High availability (no single point of failure)

Run two HAProxy nodes and float a virtual IP using keepalived (VRRP). One node is master; the other takes over on failure. Use configuration management and synchronized certificates for fast failover. For zero‑downtime config changes, use systemctl reload haproxy after validating with haproxy -c -f /etc/haproxy/haproxy.cfg.

Performance tuning tips

- Match nbthread to CPU cores and consider cpu-map pinning.

- Raise maxconn carefully and tune kernel limits (fs.file-max, somaxconn).

- Use SO_REUSEPORT and modern schedulers; keep timeouts realistic to avoid stuck connections.

- Pick the right algorithm (leastconn for slow backends, roundrobin for general use).
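The thread and connection settings above live in the global section. The values below are illustrative only and assume a 4-core host. Recent HAProxy versions already size the thread count to the available CPUs by default, so treat explicit pinning as something to measure rather than a required step.

```haproxy
global
    # Example ceiling; raise it only together with the kernel and ulimit settings
    maxconn 20000
    # Assumes a 4-core host; match this to your CPU count
    nbthread 4
    # Pin threads 1-4 of process 1 to CPUs 0-3
    cpu-map auto:1/1-4 0-3
```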

Troubleshooting HAProxy on Linux

Common issues and quick fixes

- Cannot bind to port 80/443: Another service is listening. Check with ss -ltnp | grep :80. Stop or change the conflicting service.

- Backends always DOWN: Health check path/status mismatch. Validate with curl from the HAProxy host to backend IPs; open firewalls.

- TLS errors: Use a proper PEM bundle (key + cert + chain). Verify permissions and SNI configuration.

- SELinux denials (RHEL): Temporarily test with setenforce 0, then set booleans (e.g., setsebool -P haproxy_connect_any 1) instead of disabling SELinux.

- Reload fails: Run haproxy -c -f /etc/haproxy/haproxy.cfg to validate before systemctl reload haproxy.

Helpful commands

# Validate config

sudo haproxy -c -f /etc/haproxy/haproxy.cfg

# Watch logs

sudo journalctl -u haproxy -f

# Check listeners

ss -ltnp | grep haproxy

# Version & build options (features compiled in)

haproxy -vv

HAProxy vs NGINX vs Envoy: When to Choose What

- HAProxy: Exceptional performance, mature L7 features, rich ACLs, stick‑tables, and robust health checks. Great for classic and modern web workloads.

- NGINX: Strong web server + reverse proxy combo; great for static content and simple proxying. Advanced load‑balancing often requires NGINX Plus.

- Envoy: Modern proxy focused on service meshes, HTTP/2/3, gRPC, and xDS control planes; ideal for cloud‑native microservices at scale.

If your primary need is high‑performance load balancing with deep routing logic and proven stability, HAProxy is a safe default on Linux.

Real World Tips from 15+ Years in Hosting

- Keep timeouts short but realistic; long timeouts mask problems and tie up connections.

- Prefer health endpoints like /health that perform a lightweight DB/cache check.

- Implement canary backends with lower weight for safe rollouts.

- Use per‑environment configs (dev/stage/prod) and test with -c before pushing changes.

- Automate certificate renewals (e.g., Certbot hooks) and reload HAProxy gracefully.
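The canary tip can be sketched with per-server weights. In the fragment below, canary1 and its address are hypothetical, and the weights are chosen so the canary receives roughly 10% of new requests under roundrobin.

```haproxy
backend be_app
    balance roundrobin
    option httpchk GET /health
    http-check expect status 200
    # Stable servers share most of the traffic
    server app1    10.0.0.11:8080 check weight 45
    server app2    10.0.0.12:8080 check weight 45
    # Hypothetical canary running the new release, about 10% of requests
    server canary1 10.0.0.13:8080 check weight 10
```

If cookie-based stickiness is enabled on the same backend, returning clients stay on their assigned server, so the observed split will drift from the configured weights.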

FAQs – HAProxy on Linux Server

1. What is HAProxy used for on a Linux server?

HAProxy is a load balancer and reverse proxy that distributes client traffic across multiple backend servers. On Linux, it improves availability, performance, and security for websites, APIs, and microservices by providing health checks, SSL termination, routing rules, and observability.

2. How do I install HAProxy on Ubuntu?

Run sudo apt update && sudo apt install -y haproxy, then sudo systemctl enable --now haproxy. Verify with haproxy -v and adjust /etc/haproxy/haproxy.cfg. Validate config using haproxy -c -f /etc/haproxy/haproxy.cfg before reloading.

3. Can HAProxy terminate SSL and redirect HTTP to HTTPS?

Yes. Bind HTTPS with your certificate (PEM) and configure a redirect in the HTTP frontend. You can also enforce HSTS and HTTP/2. For end‑to‑end encryption, use TCP mode or re‑encrypt to backends with their own certificates.
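As a sketch of the re-encryption option, the backend below opens a new TLS connection to each server after HAProxy has terminated the client connection. The backend name, port 8443, the /health path, and the CA file path are assumptions for illustration.

```haproxy
backend be_app_tls
    mode http
    balance roundrobin
    option httpchk GET /health
    # "ssl" opens a new TLS session to each backend; "verify required" checks
    # the backend certificate against the CA file (hypothetical path)
    server app1 10.0.0.11:8443 ssl verify required ca-file /etc/haproxy/certs/backend-ca.pem check
    server app2 10.0.0.12:8443 ssl verify required ca-file /etc/haproxy/certs/backend-ca.pem check
```

Unlike TCP pass-through, this keeps HTTP routing, header manipulation, and cookie stickiness available, at the cost of a second TLS handshake per backend connection.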

4. How do I enable sticky sessions in HAProxy?

Add a cookie directive in the backend (e.g., cookie SRV insert) and assign consistent cookies to each server (e.g., server app1 ... cookie s1). Alternatively, use balance source for IP‑based stickiness when cookies aren’t available.

5. Is HAProxy better than NGINX for load balancing?

Both are excellent. HAProxy excels in advanced L7 load balancing, ACLs, stick‑tables, and health checks; NGINX combines a web server with robust proxying.

For specialized load balancing and high throughput, HAProxy is often preferred on Linux; for serving static files plus basic proxying, NGINX is strong.