If your goal is to truly understand load balancer solutions on a Linux server, this guide is designed for you. Here, you’ll learn how load balancers work, the types available, popular open-source options, configuration basics, and their real-world benefits for web servers, applications, and microservices, all explained in straightforward language.

What Is a Load Balancer?

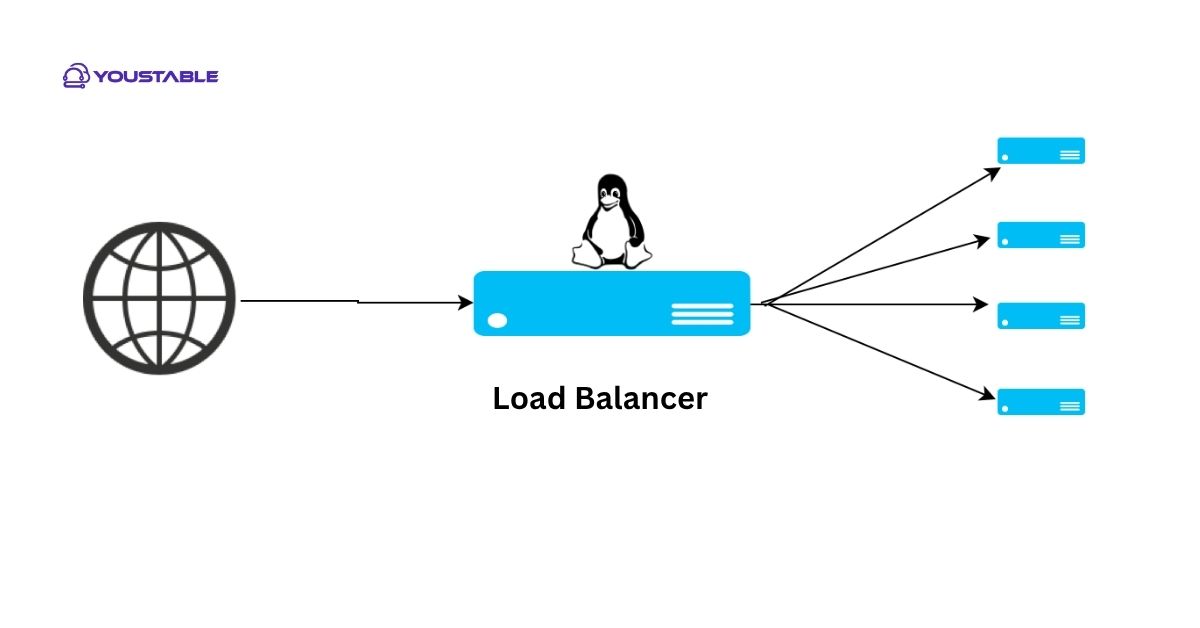

A load balancer is a system or software component that automatically distributes incoming network traffic across multiple backend servers. Its primary goal is to ensure no single server bears too much load, leading to improved performance, reliability, and uptime for your Linux-based services.

Why Use a Load Balancer on Linux?

- Optimize resource utilization: Prevent server overload and make the most of your hardware.

- Increase service availability: If one server fails, traffic is seamlessly routed to healthy servers.

- Scale applications easily: Add or remove servers without downtime.

- Enhance security: Hide backend servers from direct access and terminate SSL/TLS in one place.

How Do Load Balancers Work?

When a client (such as a web browser or mobile app) requests a service, the load balancer decides which server will handle the request using a specific algorithm. Key algorithms include:

- Round Robin: Requests go to each server in turn.

- Least Connections: Directs new connections to the server with the fewest current connections.

- Source IP Hash: Hashes the client’s IP address so the same client is consistently sent to the same server, which provides session persistence.
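The three strategies above can be sketched in a few lines of Python. This is a minimal illustration, not how HAProxy or NGINX implement them internally: the backend addresses and class names are hypothetical, connection counts live in memory, and weights, thread safety, and server failures are ignored.

```python
import hashlib
import itertools

# Hypothetical backend pool, for illustration only.
BACKENDS = ["192.168.1.10:80", "192.168.1.11:80", "192.168.1.12:80"]


class RoundRobin:
    """Hand out backends in a fixed rotating order."""

    def __init__(self, backends):
        self._cycle = itertools.cycle(backends)

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Pick the backend with the fewest active connections."""

    def __init__(self, backends):
        self.active = {b: 0 for b in backends}

    def pick(self):
        # min() returns the first backend with the lowest count,
        # so ties are broken by list order.
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1  # caller must call release() when done
        return backend

    def release(self, backend):
        self.active[backend] -= 1


def source_ip_hash(client_ip, backends):
    """Map a client IP to a backend deterministically (session persistence)."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


if __name__ == "__main__":
    rr = RoundRobin(BACKENDS)
    print([rr.pick() for _ in range(4)])

    lc = LeastConnections(BACKENDS)
    first = lc.pick()
    lc.pick()
    lc.release(first)
    print(lc.pick())  # the first backend is idle again, so it is chosen

    print(source_ip_hash("203.0.113.7", BACKENDS))
```

The sketch uses `hashlib` rather than Python's built-in `hash()`, which is randomized per process for strings, so a given client IP maps to the same backend across restarts. With simple modulo hashing, adding or removing a backend remaps many clients to different servers.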

Load balancers often include health checks to automatically detect and bypass failed servers, further ensuring reliability.
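At its simplest, a health check tries to open a TCP connection to each backend and drops the ones that do not answer. The sketch below assumes a plain TCP connect is a good enough signal and uses a hypothetical 2-second timeout. HAProxy's `check` keyword, used in the configuration example later in this guide, performs a TCP check like this by default, although production checks also run on a schedule and usually require several consecutive failures before marking a server down.

```python
import socket


def is_healthy(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts, and timeouts
        # (socket.timeout is a subclass of OSError).
        return False


def healthy_backends(backends, timeout=2.0):
    """Filter (host, port) pairs down to those that accept connections."""
    return [(h, p) for h, p in backends if is_healthy(h, p, timeout)]
```

For example, `healthy_backends([("192.168.1.10", 80), ("192.168.1.11", 80)])` would return only the servers from the HAProxy example that currently accept connections.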

Types of Load Balancers on Linux Servers

By spreading traffic across servers, load balancers improve reliability, performance, and fault tolerance. On Linux, several types are available, and the right one depends on your infrastructure size, budget, and traffic needs.

Software Load Balancers

These run as ordinary software on general-purpose servers instead of dedicated hardware, which makes them highly flexible and customizable. They are popular because they are cost-efficient and adaptable.

- Examples: NGINX, HAProxy, Traefik, Envoy, Seesaw.

- Advantages: Cost-effective, easy to automate, and well integrated with modern cloud platforms.

Hardware Load Balancers

These are physical appliances optimized for high throughput. Large enterprises use them for very high-performance needs; they are less common in typical Linux server environments.

Virtual Load Balancers

These run as virtual machines or containers, offering the features of software load balancers with added portability.

Popular Software Load Balancers for Linux

| Load Balancer | Layer Support | Key Features | Use Case |
|---|---|---|---|
| HAProxy | L4/L7 (TCP/HTTP) | High performance, flexible | High-traffic websites, APIs |
| NGINX | L7 (HTTP/HTTPS); L4 (TCP/UDP) via the stream module | Reverse proxy, scalability | Web servers, microservices |
| Traefik | L4/L7, cloud native | Auto-discovery, metrics | Docker/Kubernetes, microservices |
| Envoy | L4/L7 | Service mesh, HTTP/2, gRPC | Microservices, modern apps |
| Seesaw | L4 | Developed at Google, simple | Internal infrastructure traffic |

Load Balancing Layers: L4 vs L7

- Layer 4 (L4): Balances traffic at the transport layer (TCP/UDP). It is fast because it does not inspect application content, but for the same reason it cannot route based on that content.

- Layer 7 (L7): Operates at the application layer (HTTP/HTTPS). It can inspect requests, route them based on application data such as URL paths or headers, apply complex rules, and rewrite URLs.
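To make the difference concrete, the sketch below contrasts the two routing decisions. An L4 balancer only sees connection details such as the client address and port, while an L7 balancer can read the HTTP request, here routing by URL path prefix. The pool names, addresses, path rules, and function names are hypothetical examples, not any real product's configuration.

```python
import hashlib

# Hypothetical backend pools, for illustration only.
POOLS = {
    "api": ["10.0.0.21:8080", "10.0.0.22:8080"],
    "static": ["10.0.0.31:80"],
    "web": ["10.0.0.11:80", "10.0.0.12:80"],
}

# Hypothetical L7 rules: first matching path prefix wins; "web" is the fallback.
PATH_RULES = [("/api/", "api"), ("/static/", "static")]


def _stable_index(key, n):
    """Deterministically map a string key to an index in range(n)."""
    digest = hashlib.sha256(key.encode()).digest()
    return int.from_bytes(digest[:8], "big") % n


def route_l4(client_ip, client_port):
    """L4: only the connection tuple is known, so all traffic shares one pool."""
    pool = POOLS["web"]
    return pool[_stable_index(f"{client_ip}:{client_port}", len(pool))]


def route_l7(client_ip, path):
    """L7: the HTTP path is visible, so requests can go to specialized pools."""
    pool_name = next(
        (name for prefix, name in PATH_RULES if path.startswith(prefix)), "web"
    )
    pool = POOLS[pool_name]
    return pool[_stable_index(client_ip, len(pool))]
```

For example, `route_l7("198.51.100.1", "/api/users")` returns one of the `api` backends, while `route_l4` has no way to make that distinction because it never sees the path.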

How to Deploy and Configure a Load Balancer on Linux

Setting up a load balancer on a Linux server improves uptime and distributes workload efficiently across backend servers. This section walks you through the basic deployment and configuration steps.

- Install Your Chosen Load Balancer (e.g., HAProxy, NGINX)

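On Debian/Ubuntu, install the one you chose. The rest of this example uses HAProxy:

```bash
sudo apt update
sudo apt install haproxy
# Or, if you chose NGINX instead (installing both makes them compete for port 80):
# sudo apt install nginx
```

- Basic Configuration Example (HAProxy)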

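Add a frontend and a backend to /etc/haproxy/haproxy.cfg, keeping the `global` and `defaults` sections the package already provides. The frontend listens on port 80 and passes requests to two backend web servers in turn:

```
frontend http_front
    bind *:80
    mode http
    default_backend http_back

backend http_back
    mode http
    balance roundrobin
    # "check" enables periodic health checks (a TCP connect by default)
    server server1 192.168.1.10:80 check
    server server2 192.168.1.11:80 check
```

- Enable and Start the Service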

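Check the configuration for errors, then enable HAProxy at boot and restart it so the new configuration is loaded even if the service was already running:

```bash
sudo haproxy -c -f /etc/haproxy/haproxy.cfg
sudo systemctl enable haproxy
sudo systemctl restart haproxy
```

- Test and Monitor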

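Send requests with curl, a web browser, or a load-testing utility and confirm that responses come from more than one backend. Most load balancers also provide dashboards or logs for monitoring traffic and backend health; HAProxy, for example, can serve a statistics page.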
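A quick way to confirm that traffic is being spread is to send a batch of requests and count which backend answered. The sketch below assumes each backend identifies itself in its response body (for example, a test page that prints the server's hostname); that convention, the function name, and the URL `http://localhost/` are assumptions for illustration only.

```python
import urllib.request
from collections import Counter


def tally_backends(url, requests=10, timeout=5.0):
    """Send `requests` GETs to url and count the responses by body text.

    Assumes (hypothetically) that each backend returns a short body that
    identifies it, such as its hostname.
    """
    counts = Counter()
    for _ in range(requests):
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            counts[resp.read().decode().strip()] += 1
    return counts
```

Run on the load balancer host, `tally_backends("http://localhost/")` should show roughly even counts for the two servers when the round-robin configuration above is active and no other traffic is arriving.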

Real-World Use Cases for Linux Load Balancers

Linux load balancers are used across industries to improve performance, reliability, and scalability. Here are some practical scenarios where they shine:

- Web hosting: Distribute traffic across a farm of web servers for large websites or SaaS platforms.

- APIs and microservices: Route traffic to available service instances, crucial for modern, cloud-native applications.

- Database clustering: Load balance read requests among read replicas.

- Mail servers and FTP: Balance connection loads for improved reliability and redundancy.
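For the database case, the usual pattern is to send writes to the primary and spread reads across the replicas. The sketch below illustrates only that routing decision. Treating any statement that starts with `SELECT` as a read is a deliberately naive assumption, and the host names and function name are hypothetical.

```python
import itertools

# Hypothetical database endpoints, for illustration only.
PRIMARY = "db-primary:5432"
REPLICAS = ["db-replica-1:5432", "db-replica-2:5432"]
_replicas = itertools.cycle(REPLICAS)


def route_query(sql):
    """Naive read/write split: SELECTs go to replicas, all else to the primary.

    Assumption: a statement is a read if it starts with SELECT. This ignores
    transactions, SELECT ... FOR UPDATE, and replication lag.
    """
    if sql.lstrip().upper().startswith("SELECT"):
        return next(_replicas)  # rotate reads across replicas
    return PRIMARY
```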

Security and Best Practices

Following these best practices helps keep your Linux load balancer setup secure, efficient, and scalable:

- Always run health checks: Avoid sending users to failing servers.

- Hide backend details: Use the load balancer as a reverse proxy to limit direct exposure of your infrastructure.

- Enable SSL termination: Offload HTTPS to your balancer, then forward traffic internally.

- Scale as needed: Add or remove backend servers dynamically for traffic spikes.
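Adding or removing backends at runtime means the server list changes while requests are being routed, so updates and selection must not interfere with each other. The sketch below assumes a single process and round-robin selection; the `BackendPool` class and its methods are hypothetical, not a real load balancer's API.

```python
import threading


class BackendPool:
    """Hypothetical round-robin pool whose membership can change while in use."""

    def __init__(self, backends=()):
        self._lock = threading.Lock()
        self._backends = list(backends)
        self._next = 0

    def add(self, backend):
        with self._lock:
            if backend not in self._backends:
                self._backends.append(backend)

    def remove(self, backend):
        with self._lock:
            if backend in self._backends:
                self._backends.remove(backend)

    def pick(self):
        with self._lock:
            if not self._backends:
                raise RuntimeError("no backends available")
            # Wrap the index in case the list shrank since the last pick.
            self._next %= len(self._backends)
            backend = self._backends[self._next]
            self._next += 1
            return backend
```

With HAProxy or NGINX you would normally change the server list through their configuration and a reload rather than with code like this; the sketch only shows why membership changes need to be synchronized with request routing.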

FAQ: Understanding Load Balancers

What is a load balancer, and why do I need it on my Linux server?

A load balancer intelligently distributes network traffic across multiple servers, preventing overload on any single server. This redundancy improves uptime, system reliability, scalability, and can enhance user experience by reducing latency. It’s a must-have for anyone running more than one backend server in production environments.

Which open-source load balancer is best for my Linux server?

The best choice depends on your use case. HAProxy and NGINX are widely adopted for their performance, flexibility, and active communities. For containerized or cloud-native environments, Traefik and Envoy are top picks because of their advanced integration and metrics capabilities.

How does a load balancer know if a server fails?

Load balancers run regular health checks, such as TCP connection attempts, pings, or HTTP requests to a status URL, to determine whether backend servers are healthy. When a server fails its checks, traffic is routed to the remaining healthy servers until it recovers, so service continues without interruption.
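An HTTP health check goes one step further than a TCP connection attempt: it requests a status URL and treats only a successful response as healthy. In the sketch below, the function name, the `/health` path, and the 2xx-only rule are assumptions; real services expose whatever check endpoint they choose.

```python
import http.client
import urllib.request


def http_healthy(base_url, path="/health", timeout=2.0):
    """Return True if GET base_url + path answers with a 2xx status in time.

    The "/health" default path is a hypothetical convention, not a standard.
    """
    try:
        with urllib.request.urlopen(base_url + path, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (OSError, http.client.HTTPException):
        # urllib raises HTTPError (a URLError, itself an OSError) for 4xx/5xx;
        # refused connections and timeouts are OSErrors as well.
        return False
```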

Does using a load balancer affect website speed or SEO?

A load balancer adds one extra network hop, but when properly configured it usually improves overall speed and reliability by preventing server overload and absorbing traffic bursts. It also helps deliver fast, consistent responses and can handle HTTPS, which search engines favor, so it supports good SEO results.

Can load balancers secure my Linux server applications?

Yes. Load balancers can terminate SSL/TLS traffic, prevent direct access to backend servers, and provide another layer of defense against common attacks. Coupled with firewalls and security best practices, they form an essential part of a robust security posture.

Conclusion

To understand load balancer technology on Linux servers is to gain control over server uptime, scaling, and performance. Whether you manage a blog, e-commerce site, or an enterprise application, modern load balancers provide seamless traffic distribution, reliability, and security. Open-source options like HAProxy, NGINX, Traefik, and Envoy make load balancing accessible to everyone, with no special hardware needed. Optimize your infrastructure today by learning, testing, and deploying a load balancer, and watch your Linux server environment thrive.