A Pandas DataFrame is a two dimensional, tabular data structure in Python with labeled rows and columns, designed for fast data cleaning, analysis, and transformation. Think of it as an in memory spreadsheet with powerful indexing, selection, and aggregation features.

Below, you’ll see Pandas DataFrame explained with examples that you can copy, run, and adapt. Pandas DataFrame is the core building block of data analysis in Python. It makes importing, cleaning, transforming, and summarizing data easy and efficient.

In this guide, I’ll explain what a DataFrame is, how to create it, and how to work with it backed by practical examples and best practices from real analytics and engineering projects.

What is a Pandas DataFrame?

A Pandas DataFrame is a 2D labeled table where:

- Columns can hold different data types (int, float, string, datetime, categorical).

- Rows and columns have labels (index and column names) for fast, human readable access.

- Vectorized operations enable fast arithmetic and transformations across columns.

DataFrames are built on top of Series (1D arrays) and integrate closely with NumPy for performance. Compared to plain Python lists or CSV handling, DataFrames offer built in tools for missing values, joins, group operations, and analytics.

Pandas DataFrame vs Series vs NumPy Array

- Series: 1D labeled array (like a single column).

- DataFrame: 2D labeled table of multiple Series aligned by index.

- NumPy array: Fast, homogeneous n-dimensional array (no column labels or mixed types).

Choose DataFrame for labeled, mixed type tabular data; NumPy arrays for pure numeric, same type data; Series for single columns or features.

How to Create a DataFrame (With Examples)

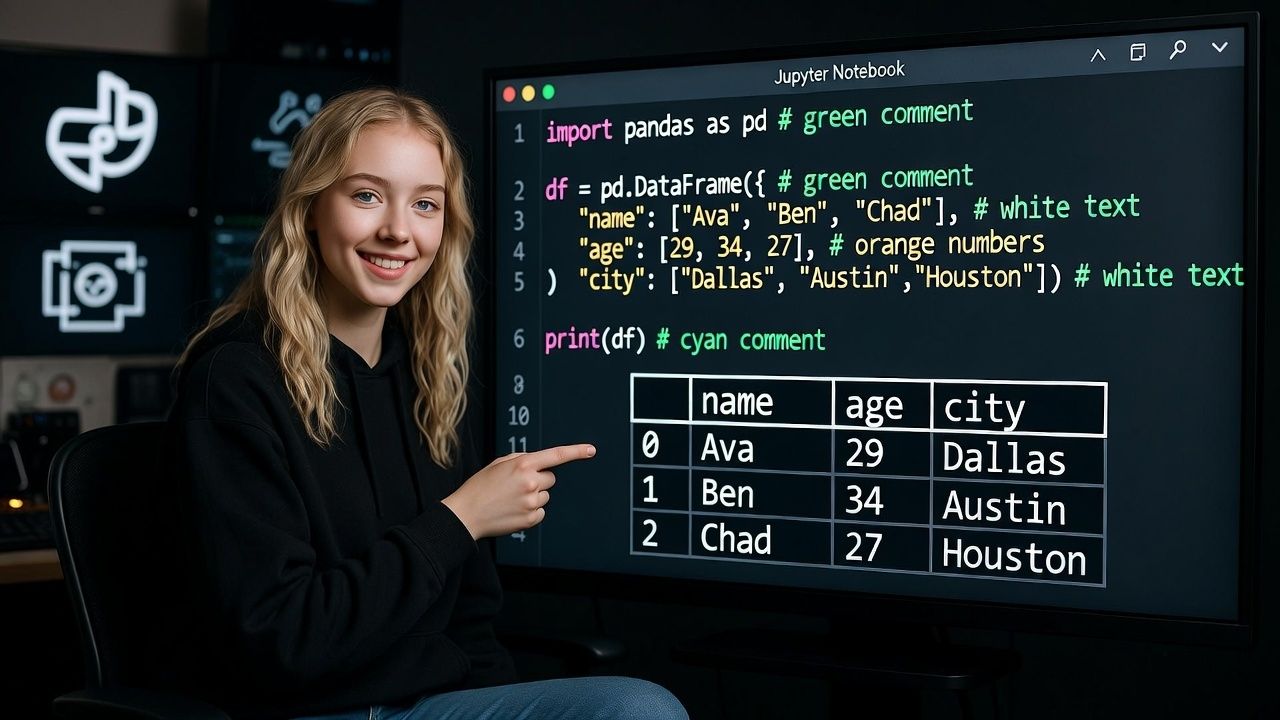

1. From Python dictionaries or lists

import pandas as pd

data = {

"name": ["Ava", "Ben", "Chad"],

"age": [29, 34, 27],

"city": ["Dallas", "Austin", "Houston"]

}

df = pd.DataFrame(data)

print(df)This is the simplest way to build a small DataFrame for testing or demos.

2. From CSV, Excel, JSON, or SQL

# CSV

df = pd.read_csv("sales.csv")

# Excel

df_xls = pd.read_excel("sales.xlsx", sheet_name="Q1")

# JSON

df_json = pd.read_json("data.json")

# SQL (requires an engine, e.g., SQLite or PostgreSQL)

from sqlalchemy import create_engine

engine = create_engine("sqlite:///app.db")

df_sql = pd.read_sql("SELECT * FROM orders", engine)Use these readers in real projects, especially for larger datasets and production pipelines.

3. From NumPy arrays or Series

import numpy as np

arr = np.array([[1, 2], [3, 4]])

df_np = pd.DataFrame(arr, columns=["a", "b"])

print(df_np)When data starts numeric and unlabelled, add column names immediately for clarity and downstream compatibility.

Selecting, Indexing, and Filtering Data

.loc vs .iloc

# Sample setup

df = pd.DataFrame({

"name": ["Ava", "Ben", "Chad", "Dana"],

"age": [29, 34, 27, 31],

"score": [85, 92, 88, 79]

}, index=["u1", "u2", "u3", "u4"])

# Label-based selection with .loc

print(df.loc["u2", "score"]) # row label "u2", column "score"

print(df.loc["u1":"u3", ["name","age"]]) # slice by labels

# Position-based selection with .iloc

print(df.iloc[1, 2]) # second row, third column (0-based)

print(df.iloc[0:2, 0:2]) # top-left 2x2 blockBoolean filtering and query

# Filter rows

adults = df[df["age"] >= 30]

high_scorers = df[(df["score"] >= 90) & (df["age"] < 35)]

# Using query (readable for complex filters)

top = df.query("score >= 90 and age < 35")Prefer vectorized boolean masks or query strings for speed and readability instead of loops.

Common DataFrame Operations

Add, rename, and drop columns

# Create or transform columns

df["age_group"] = pd.cut(df["age"], bins=[0, 29, 100], labels=["Young", "Adult"])

df = df.rename(columns={"score": "exam_score"})

df = df.drop(columns=["age_group"])Sorting and ranking

df_sorted = df.sort_values(by=["exam_score", "age"], ascending=[False, True])

df["rank"] = df["exam_score"].rank(ascending=False, method="dense")GroupBy and aggregation

sales = pd.DataFrame({

"region": ["US","US","EU","EU","APAC"],

"rep": ["A","B","C","D","E"],

"revenue": [100, 130, 90, 120, 150]

})

summary = sales.groupby("region")["revenue"].agg(["count","sum","mean","max"])

print(summary)GroupBy splits data into groups, applies functions, and combines results. It’s the workhorse for reporting and BI style summaries.

Apply vs vectorization

# Prefer vectorized operations

df["double_score"] = df["exam_score"] * 2

# If you must use apply, keep it simple (it’s slower)

df["pass_fail"] = df["exam_score"].apply(lambda x: "Pass" if x >= 80 else "Fail")Vectorized operations leverage C-optimized routines and are significantly faster than row wise apply.

Handling Missing Data and Types

Detect, fill, or drop missing values

df.isna().sum() # count of NaNs per column

df_filled = df.fillna({"exam_score": 0})

df_dropped = df.dropna(subset=["exam_score"])Use domain specific strategies: forward fill time series, fill with medians for skewed numeric data, or drop when appropriate.

Fix dtypes for speed and memory

# Convert strings that look like numbers

df["exam_score"] = pd.to_numeric(df["exam_score"], errors="coerce")

# Categorical for repeated strings

df["name"] = df["name"].astype("category")

# Parse datetimes

df["date"] = pd.to_datetime(df["date"], errors="coerce")Proper dtypes (especially categorical and datetime) reduce memory and unlock faster filtering and grouping.

Merging, Joining, and Concatenating

Combine multiple DataFrames just like SQL joins or stacked tables.

customers = pd.DataFrame({

"cust_id": [1,2,3],

"name": ["Ava","Ben","Chad"]

})

orders = pd.DataFrame({

"order_id": [101,102,103],

"cust_id": [1,1,3],

"amount": [39.5, 12.0, 55.0]

})

# SQL-style inner join on a key

merged = pd.merge(orders, customers, on="cust_id", how="inner")

# Concatenate rows (stack)

stacked = pd.concat([orders, orders], ignore_index=True)

# Join by index

customers_i = customers.set_index("cust_id")

orders_i = orders.set_index("cust_id")

joined = customers_i.join(orders_i, how="left")Choose inner for intersection, left to keep all from the left table, and outer for full union. Always validate key uniqueness to avoid duplication.

I/O and Serialization: CSV vs Parquet vs Feather

CSV is human readable but large and slow to parse. Parquet and Feather are binary columnar formats that load faster and use less disk.

# CSV

df.to_csv("data.csv", index=False)

# Parquet (requires pyarrow or fastparquet)

df.to_parquet("data.parquet", index=False)

# Feather (fast for Python-R interchange)

df.to_feather("data.feather")For analytics at scale, prefer Parquet or Feather to reduce I/O overhead and speed up pipelines.

Performance Tips for Large DataFrames

- Read in chunks: use read_csv with chunksize for huge files.

- Downcast numerics: convert float64/int64 to float32/int32 when safe.

- Use categorical dtype for repeated strings (e.g., country codes, product IDs).

- Prefer vectorized operations and built in aggregations over loops.

- Filter early, select only necessary columns (usecols=…).

- Cache intermediate results to Parquet for faster re-runs.

# Example: chunked CSV processing

import pandas as pd

total = 0

for chunk in pd.read_csv("events.csv", usecols=["user_id","amount"], chunksize=500_000):

chunk["amount"] = pd.to_numeric(chunk["amount"], errors="coerce")

total += chunk["amount"].sum(skipna=True)

print(total)Working with millions of rows benefits from strong compute and I/O. If you run Jupyter, Airflow, or ETL scripts in the cloud, a scalable VPS with NVMe storage helps. At YouStable, we provision SSD/NVMe VPS and managed cloud instances suitable for data workflows, so your Pandas jobs finish faster and more reliably.

Real World Examples You Can Reuse

Web log analytics (filtering and grouping)

import pandas as pd

logs = pd.read_csv("access.log.csv", usecols=["ip","status","bytes","ts"])

# Parse timestamp and filter errors

logs["ts"] = pd.to_datetime(logs["ts"])

errors = logs[logs["status"].isin([500, 502, 503, 504])]

# Error rate by minute

rate = (errors

.groupby(pd.Grouper(key="ts", freq="1min"))

.size()

.rename("errors_per_min"))

print(rate.tail())Sales analytics (joins and aggregation)

orders = pd.read_csv("orders.csv") # order_id, cust_id, amount, date

customers = pd.read_csv("customers.csv") # cust_id, segment

df = pd.merge(orders, customers, on="cust_id", how="left")

df["date"] = pd.to_datetime(df["date"])

# Revenue by segment and month

monthly = (df

.groupby([pd.Grouper(key="date", freq="MS"), "segment"])["amount"]

.sum()

.reset_index()

.sort_values(["date", "segment"]))

print(monthly.head())These patterns appear in business dashboards, anomaly detection, and capacity planning across engineering teams.

Best Practices Checklist

- Name columns clearly and consistently (snake_case recommended).

- Set index when it conveys identity or time (e.g., set a datetime index for time series).

- Validate joins: check key uniqueness and row counts after merges.

- Use Parquet for large intermediate datasets, CSV for interchange with humans.

- Convert dtypes early to avoid surprises and reduce memory.

- Favor vectorization and built in methods; avoid row wise apply in tight loops.

- Write reproducible code: pin versions and seed randomness when needed.

FAQs

What is a Pandas DataFrame used for?

A Pandas DataFrame is used for loading, cleaning, transforming, analyzing, and exporting tabular data. It supports fast filtering, joins, aggregations, time series resampling, and feature engineering for machine learning pipelines.

How do I create a DataFrame in Pandas?

You can create one from dictionaries/lists (pd.DataFrame), files like CSV or Excel (pd.read_csv, pd.read_excel), JSON (pd.read_json), or SQL (pd.read_sql). For numeric data, convert from NumPy arrays and add column names for readability.

What’s the difference between .loc and .iloc?

.loc selects by label (index/column names), while .iloc selects by integer position. Use .loc when you know the labels, and .iloc for positional slicing and numeric offsets.

How do I handle missing values in a DataFrame?

Use isna to detect, fillna to impute (with mean/median/mode or domain specific values), and dropna to remove rows/columns. For time series, forward fill or back fill often makes sense, but validate with your domain rules.

How can I speed up Pandas on large datasets?

Read in chunks, downcast numeric types, use categorical dtypes, filter early, and switch to Parquet. For larger than memory workloads, consider Dask or Polars. Running on NVMe backed VPS or cloud nodes (like those from YouStable) can also reduce I/O bottlenecks and speed up jobs.

With these foundations and examples, you can confidently use Pandas DataFrames for data wrangling, analytics, and production ETL. Keep your code clear, your dtypes correct, and your storage formats optimized for performance.