To install a load balancer on a Linux server, choose a software balancer (HAProxy or Nginx), install via your distro’s package manager, configure a frontend and backend pool with health checks and SSL, open firewall ports 80/443, then test failover. For high availability, add a second node and a virtual IP with Keepalived.

In this step-by-step guide, you’ll learn how to install a load balancer on a Linux server using HAProxy or Nginx, configure health checks and SSL termination, deploy high availability with Keepalived, harden security, monitor performance, and avoid common pitfalls. The instructions are beginner-friendly yet technically precise, based on real-world hosting experience.

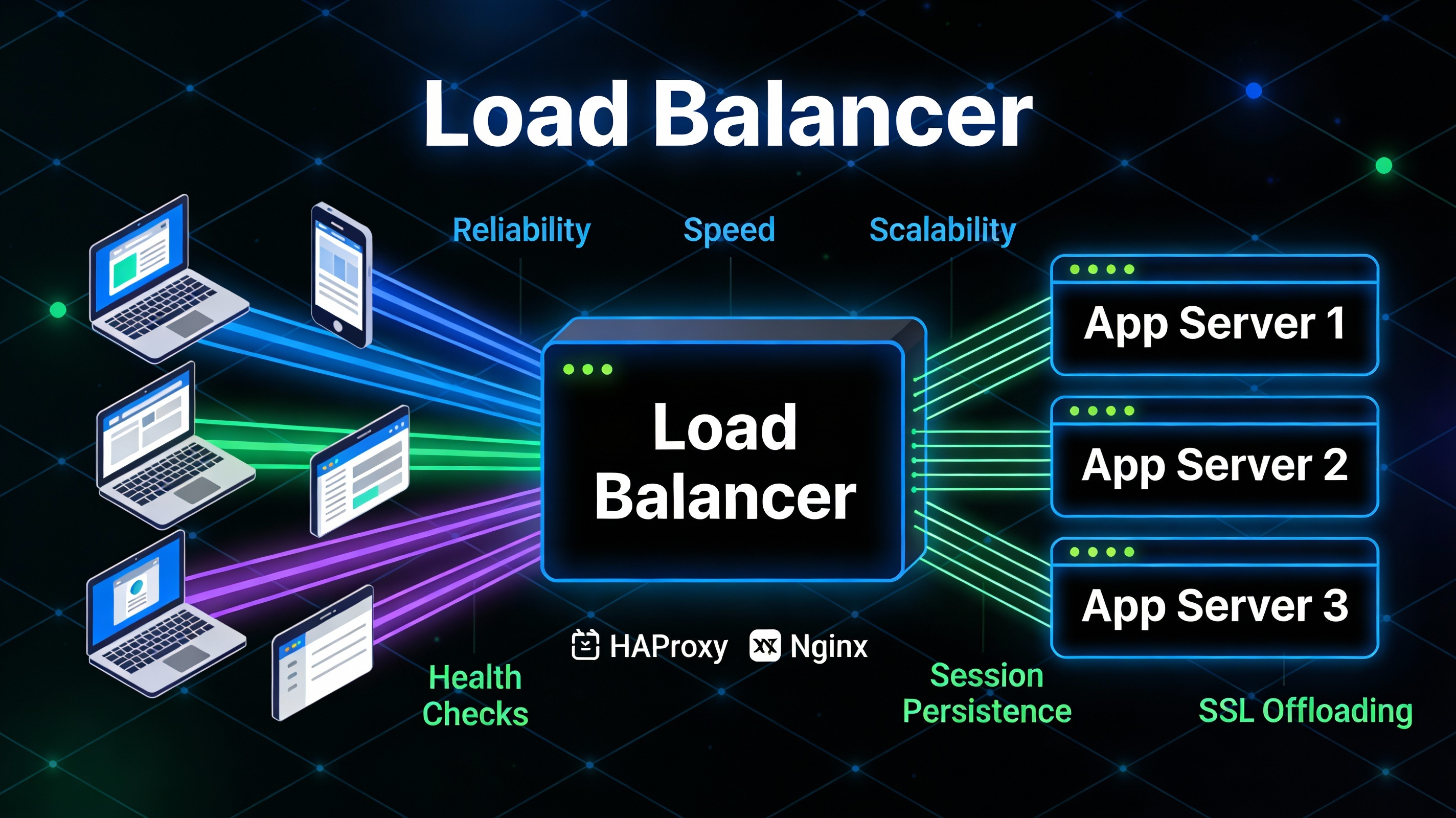

What is a Load Balancer (and Why it Matters)?

A load balancer distributes incoming traffic across multiple application servers to improve reliability, speed, and scalability. On Linux, popular open-source choices are HAProxy (high-performance L4/L7 proxy) and Nginx (web server and L7 reverse proxy). You can balance HTTP/HTTPS (Layer 7) or raw TCP (Layer 4) traffic and add health checks, session persistence, and SSL offloading.
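To make "distributes traffic" concrete, here is a toy shell model of the two algorithms used later in this guide: round robin (HAProxy's balance roundrobin) and least connections (Nginx's least_conn). It is a sketch for intuition only, not how HAProxy or Nginx implement them; the function names and the connection counts you pass in are illustrative assumptions.

```shell
#!/bin/sh
# Toy model of two balancing algorithms, for intuition only
# (not HAProxy or Nginx source). Addresses match this guide's examples.
BACKENDS="10.0.0.11 10.0.0.12"
RR_INDEX=0

# Round robin: each call takes the next backend in a fixed rotation.
# The pick is stored in REPLY; call the function directly, not inside $(...),
# so the RR_INDEX update survives between calls.
next_round_robin() {
  set -- $BACKENDS                 # split the list into $1, $2, ...
  local count=$# i=$RR_INDEX
  while [ "$i" -gt 0 ]; do shift; i=$((i - 1)); done
  REPLY=$1
  RR_INDEX=$(( (RR_INDEX + 1) % count ))
}

# Least connections: pick the backend with the fewest active connections.
# Arguments are address:active_count pairs (IPv4 only); ties go to the first.
least_conn_pick() {
  local best="" best_count="" pair count
  for pair in "$@"; do
    count=${pair##*:}
    if [ -z "$best_count" ] || [ "$count" -lt "$best_count" ]; then
      best=${pair%:*}
      best_count=$count
    fi
  done
  printf '%s\n' "$best"
}
```

Calling next_round_robin twice yields 10.0.0.11 and then 10.0.0.12 in REPLY, while least_conn_pick 10.0.0.11:5 10.0.0.12:2 prints 10.0.0.12 because it has fewer open connections. Real balancers also account for server weights and health state, which this toy ignores.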

Who Should Use This Guide

This guide is for developers, sysadmins, and site owners who want to set up a Linux load balancer in front of web apps, APIs, WordPress, or microservices. It assumes basic Linux command-line knowledge and sudo access.

Load Balancing Options on Linux

Choosing the right tool depends on your traffic profile, features, and ops preferences.

- HAProxy (recommended): Excellent performance, rich health checks, stickiness, advanced routing, TLS, observability.

- Nginx (open-source): Great as a reverse proxy/L7 balancer, simple configs, strong ecosystem; passive health checks out of the box.

- IPVS/LVS (via keepalived): Kernel-level Layer 4 load balancing for very high throughput; fewer L7 features.

For most web workloads, start with HAProxy or Nginx. If you need millions of concurrent connections with minimal overhead and simple L4 logic, consider LVS/IPVS.

Prerequisites

- Linux server: Ubuntu 22.04/24.04 or RHEL/AlmaLinux/Rocky 8/9.

- Root/sudo access and basic networking knowledge.

- At least two backend app servers to balance traffic across.

- DNS record pointing to your load balancer’s public IP (or a Floating/Virtual IP in HA setups).

- Firewall access to open ports 80/443 (and 8404/9000 for stats, optional).
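Because the install commands differ between Ubuntu/Debian (apt) and the RHEL family (dnf), a small helper can pick the package manager from /etc/os-release. This is a sketch that assumes the standard ID and ID_LIKE fields; the function name detect_pkg_manager is hypothetical.

```shell
#!/bin/sh
# Print "apt" or "dnf" for the distro described by an os-release file
# (default /etc/os-release); return 1 for anything else.
detect_pkg_manager() {
  local file=${1:-/etc/os-release} id="" id_like="" key value
  # "|| [ -n ... ]" also handles a last line without a trailing newline
  while IFS='=' read -r key value || [ -n "$key" ]; do
    value=${value#\"}; value=${value%\"}     # drop optional quotes
    case $key in
      ID) id=$value ;;
      ID_LIKE) id_like=$value ;;
    esac
  done < "$file"
  case " $id $id_like " in
    *" debian "*|*" ubuntu "*) echo apt ;;
    *" rhel "*|*" fedora "*|*" centos "*) echo dnf ;;
    *) echo "unsupported distro: ID=$id" >&2; return 1 ;;
  esac
}
```

For example: PM=$(detect_pkg_manager) && sudo "$PM" install -y haproxy (on apt systems, run sudo apt update first). Rocky and AlmaLinux list rhel in ID_LIKE, so they resolve to dnf.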

Quick Decision: HAProxy vs Nginx

- Pick HAProxy if you need advanced health checks, detailed metrics, stick tables, or heavy SSL/TLS offload.

- Pick Nginx if you prefer a familiar web server + proxy stack or you’re already using it for static content and caching.

Install HAProxy on Ubuntu/Debian

Use apt to install, then configure frontends/backends, health checks, and optional SSL termination.

```shell
sudo apt update
sudo apt install -y haproxy

# Enable HAProxy at boot
sudo systemctl enable haproxy

# Verify version
haproxy -v
```

Basic HTTP load balancing with round-robin and health checks:

```haproxy
# /etc/haproxy/haproxy.cfg
global
    log /dev/log local0
    log /dev/log local1 notice
    maxconn 50000
    user haproxy
    group haproxy
    daemon

defaults
    log global
    mode http
    option httplog
    option dontlognull
    timeout connect 5s
    timeout client 50s
    timeout server 50s
    option http-server-close
    option redispatch
    retries 3

frontend fe_http
    bind *:80
    mode http
    option forwardfor
    default_backend be_app

backend be_app
    mode http
    balance roundrobin
    option httpchk GET /health
    http-check expect status 200
    server app1 10.0.0.11:80 check
    server app2 10.0.0.12:80 check

listen stats
    bind *:8404
    mode http
    stats enable
    stats uri /stats
    stats refresh 10s
```

```shell
sudo haproxy -c -f /etc/haproxy/haproxy.cfg   # syntax check
sudo systemctl restart haproxy
sudo systemctl status haproxy --no-pager
```

Add HTTPS (SSL termination) with Let’s Encrypt or your own certificate bundle:

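```shell
# Concatenate fullchain + private key into a single PEM (HAProxy expects the key in the same file)
sudo mkdir -p /etc/haproxy/certs
sudo cat /etc/letsencrypt/live/example.com/fullchain.pem /etc/letsencrypt/live/example.com/privkey.pem | sudo tee /etc/haproxy/certs/example.com.pem > /dev/null
sudo chmod 600 /etc/haproxy/certs/example.com.pem
```

Then update haproxy.cfg: put the HTTP-to-HTTPS redirect in the existing fe_http frontend (ssl_fc is only false on the plain-HTTP listener, so a redirect inside the HTTPS frontend never fires) and add an HTTPS frontend:

```haproxy
# Replace fe_http and add fe_https
frontend fe_http
    bind *:80
    mode http
    http-request redirect scheme https code 301 unless { ssl_fc }
    default_backend be_app

frontend fe_https
    bind *:443 ssl crt /etc/haproxy/certs/example.com.pem alpn h2,http/1.1
    mode http
    option forwardfor
    http-request set-header X-Forwarded-Proto https
    # Drop "preload" unless you intend to submit the domain to the HSTS preload list
    http-response set-header Strict-Transport-Security "max-age=31536000; includeSubDomains; preload"
    default_backend be_app
```

```shell
sudo haproxy -c -f /etc/haproxy/haproxy.cfg
sudo systemctl reload haproxy
```

Optional: enable sticky sessions for stateful apps (e.g., legacy PHP sessions):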

```haproxy
backend be_app
    mode http
    balance roundrobin
    cookie SRV insert indirect nocache
    option httpchk GET /health
    server app1 10.0.0.11:80 check cookie s1
    server app2 10.0.0.12:80 check cookie s2
```

Install HAProxy on RHEL/AlmaLinux/Rocky

```shell
sudo dnf install -y haproxy
sudo systemctl enable haproxy
sudo systemctl start haproxy

# SELinux: allow HAProxy to make outbound connections if needed
sudo setsebool -P haproxy_connect_any 1

# Firewalld: open HTTP/HTTPS and stats
sudo firewall-cmd --add-service=http --permanent
sudo firewall-cmd --add-service=https --permanent
sudo firewall-cmd --add-port=8404/tcp --permanent
sudo firewall-cmd --reload
```

Install Nginx as a Load Balancer (Ubuntu/RHEL)

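Nginx is great for Layer 7 balancing and reverse proxying. Open-source Nginx supports only passive health checks: if a server fails max_fails times within fail_timeout, Nginx stops sending it traffic for fail_timeout and then tries it again. Active health checks require NGINX Plus.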

```shell
# Ubuntu/Debian
sudo apt update && sudo apt install -y nginx
sudo systemctl enable nginx

# RHEL family
sudo dnf install -y nginx
sudo systemctl enable --now nginx
sudo firewall-cmd --add-service=http --add-service=https --permanent
sudo firewall-cmd --reload
```

Create an upstream and server block for HTTP load balancing:

```nginx
# /etc/nginx/conf.d/lb.conf
upstream app_backend {
    least_conn;
    server 10.0.0.11:80 max_fails=3 fail_timeout=30s;
    server 10.0.0.12:80 max_fails=3 fail_timeout=30s;
    keepalive 64;
}

server {
    listen 80;
    server_name example.com;

    location / {
        proxy_pass http://app_backend;
        proxy_set_header Host $host;
        # Append the client IP to any existing X-Forwarded-For chain
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
        # Required for upstream keepalive connections
        proxy_http_version 1.1;
        proxy_set_header Connection "";
    }

    # Health endpoint for the load balancer itself
    location = /health {
        access_log off;
        default_type text/plain;
        return 200 "OK";
    }
}
```

```shell
sudo nginx -t
sudo systemctl reload nginx
```

For HTTPS, obtain certificates with Certbot or your provider, terminate TLS on Nginx, and forward plain HTTP to the backends.
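A minimal sketch of that HTTPS setup: a shell function that prints a TLS server block for the app_backend upstream defined above. It assumes Certbot's default certificate paths under /etc/letsencrypt/live/DOMAIN/; the function name render_nginx_tls and the output file lb-ssl.conf are hypothetical.

```shell
#!/bin/sh
# Print an Nginx server block that terminates TLS for one domain and proxies
# to the app_backend upstream from /etc/nginx/conf.d/lb.conf.
# Assumes Certbot's default layout: /etc/letsencrypt/live/<domain>/.
render_nginx_tls() {
  local domain=$1
  local live="/etc/letsencrypt/live/$domain"
  # Unquoted EOF: $domain and $live expand; \$ keeps Nginx variables literal
  cat <<EOF
server {
    listen 443 ssl;
    # nginx 1.25.1+: add "http2 on;" (older versions: "listen 443 ssl http2;")
    server_name $domain;

    ssl_certificate $live/fullchain.pem;
    ssl_certificate_key $live/privkey.pem;
    ssl_protocols TLSv1.2 TLSv1.3;

    location / {
        proxy_pass http://app_backend;
        proxy_set_header Host \$host;
        proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto https;
        proxy_http_version 1.1;
        proxy_set_header Connection "";
    }
}
EOF
}
```

Usage: render_nginx_tls example.com | sudo tee /etc/nginx/conf.d/lb-ssl.conf > /dev/null, then sudo nginx -t && sudo systemctl reload nginx. Printing to stdout lets you review the block before writing it.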

High Availability: Add a Virtual IP with Keepalived

To avoid a single point of failure, deploy two load balancer nodes (LB1 and LB2) and float a Virtual IP (VIP) between them using VRRP (Keepalived). If LB1 fails, LB2 takes over automatically.

```shell
# Install Keepalived
# Ubuntu/Debian
sudo apt install -y keepalived
# RHEL family
sudo dnf install -y keepalived

# Let HAProxy/Nginx bind to the VIP on the node that does not currently hold it.
# Needed only if they bind to the VIP address itself (bind *:80 does not require it).
echo "net.ipv4.ip_nonlocal_bind = 1" | sudo tee /etc/sysctl.d/99-nonlocalbind.conf
sudo sysctl --system
```

Example Keepalived config (use different priorities on each node; higher wins):

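```conf
# /etc/keepalived/keepalived.conf (LB1 - MASTER)
vrrp_script chk_haproxy {
    script "pidof haproxy"
    interval 2
    # On failure, priority drops by 60: 150 - 60 = 90, below LB2's 100, so the VIP moves.
    # (With -30, LB1 would only fall to 120 and keep the VIP.)
    weight -60
}

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 51
    priority 150
    advert_int 1
    authentication {
        auth_type PASS
        # VRRP PASS authentication uses only the first 8 characters
        auth_pass StrongSecret123
    }
    virtual_ipaddress {
        203.0.113.50/32 dev eth0
    }
    track_script {
        chk_haproxy
    }
}
```

```conf
# /etc/keepalived/keepalived.conf (LB2 - BACKUP)
vrrp_script chk_haproxy {
    script "pidof haproxy"
    interval 2
    weight -60
}

vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass StrongSecret123
    }
    virtual_ipaddress {
        203.0.113.50/32 dev eth0
    }
    track_script {
        chk_haproxy
    }
}
```

```shell
sudo systemctl enable --now keepalived
ip addr show dev eth0 | grep 203.0.113.50   # VIP should appear on MASTER
# VRRP is IP protocol 112, sent to a multicast address by default. If a firewall filters it,
# allow it between the LBs, e.g. on firewalld:
#   sudo firewall-cmd --permanent --add-rich-rule='rule protocol value="vrrp" accept'
# Many public clouds drop multicast VRRP; there, use unicast_peer in keepalived.conf
# or the provider's floating IP feature.
```

Point your DNS A record (and AAAA, if you also float an IPv6 address) at the VIP. Test failover by stopping HAProxy or Keepalived on LB1; the VIP should move to LB2 within seconds.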
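To watch a failover happen, two small helpers can be used: vip_present checks ip -o addr output on a node for the VIP, and probe_vip polls the VIP from a client once per second so you can see how many requests fail while the VIP moves. This is a sketch; both function names are hypothetical, and the 2-second curl timeout is an arbitrary choice.

```shell
#!/bin/sh
# Succeed if the VIP shows up in `ip -o addr show` output read from stdin.
# Matching "inet <vip>/" avoids false hits such as 203.0.113.5 vs .50.
vip_present() {
  grep -qF "inet $1/"
}

# Print a timestamp and HTTP status once per second for N probes
# (default 30). "000" means curl got no HTTP response at all.
probe_vip() {
  local url=$1 left=${2:-30} code
  while [ "$left" -gt 0 ]; do
    code=$(curl -s -o /dev/null -m 2 -w '%{http_code}' "$url") || true
    printf '%s %s\n' "$(date +%T)" "$code"
    left=$((left - 1))
    sleep 1
  done
}
```

On each LB: ip -o addr show dev eth0 | vip_present 203.0.113.50 && echo "this node holds the VIP". From a client, run probe_vip http://203.0.113.50/ 60 while you stop HAProxy on LB1; the run of non-200 lines shows how long the switch took.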

Security Hardening

- Firewall: Allow only 80/443 (and 22 from your IP). Restrict stats endpoints by IP or auth.

- TLS: Use modern ciphers, enable HTTP/2, HSTS, and OCSP stapling, and auto-renew Let’s Encrypt certificates.

- Headers: Set X-Frame-Options, X-Content-Type-Options, Referrer-Policy, Content-Security-Policy (as your app allows).

- Least privilege: Run services as non-root where supported; keep packages updated.

- SELinux/AppArmor: Keep enforcing and set required booleans (e.g., haproxy_connect_any).
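To check which recommended headers your load balancer actually sends, you can pipe a curl -sI dump into a small filter. This sketch assumes the header list from the bullets above (HSTS plus the four named headers); the function name is hypothetical, and which headers you need depends on your app.

```shell
#!/bin/sh
# Read an HTTP response header dump (e.g. from curl -sI) on stdin and print
# each recommended security header that is missing, one per line.
# Returns 1 if any header is missing, 0 if all are present.
missing_security_headers() {
  local headers name missing=0
  # Normalize: strip CRs and lowercase (HTTP header names are case-insensitive)
  headers=$(tr -d '\r' | tr '[:upper:]' '[:lower:]')
  for name in strict-transport-security x-frame-options \
              x-content-type-options referrer-policy content-security-policy; do
    if ! printf '%s\n' "$headers" | grep -q "^$name:"; then
      echo "$name"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: curl -sI https://example.com/ | missing_security_headers. An empty result with exit status 0 means all five headers were present.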

Monitoring and Logging

- HAProxy: Enable the stats endpoint and a Prometheus exporter (the built-in exporter in recent HAProxy versions, or haproxy_exporter). Log to syslog and parse with ELK or Loki.

- Nginx: Access/error logs, plus stub_status or nginx-exporter for Prometheus.

- Synthetic checks: Uptime monitoring on VIP and backends.

- Capacity: Watch CPU, memory, file descriptors, and connection counts.
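HAProxy's stats page also serves CSV when you append ;csv to the stats URI, which makes a quick health check scriptable. The sketch below assumes the stats listener from this guide (port 8404, URI /stats) and reads the status column (the 18th comma-separated field in HAProxy's CSV format) to list servers that are not UP; the function name is hypothetical.

```shell
#!/bin/sh
# Read HAProxy CSV stats on stdin and print "backend/server STATUS" for every
# server whose status does not start with UP ("UP 1/3" still counts as up).
# Servers without "check" report "no check" and are listed too.
# Returns 1 if anything was printed.
haproxy_unhealthy() {
  awk -F',' '
    /^#/ || NF < 18 { next }                      # header or blank line
    $2 == "FRONTEND" || $2 == "BACKEND" { next }  # aggregate rows
    $18 !~ /^UP/ { print $1 "/" $2, $18; bad = 1 }
    END { exit bad }
  '
}
```

Usage: curl -s 'http://127.0.0.1:8404/stats;csv' | haproxy_unhealthy. Quote the URL, since ; is special to the shell. The non-zero exit status makes it easy to wire into cron or a monitoring agent.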

Testing Your Load Balancer

- Basic reachability: curl -I http://example.com or https://example.com

- Before DNS cutover: curl -I -H "Host: example.com" http://VIP (replace VIP with your virtual IP, e.g. 203.0.113.50)

- Distribution: Temporarily add a response header on each backend to identify servers, then refresh.

- Load: ab -n 1000 -c 50 https://example.com/ or siege -c50 -t1M https://example.com (ab requires the trailing slash)

- Failover: Stop one backend; traffic should shift without errors.
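For the distribution test, a filter can count which backend answered each request. This assumes you add a hypothetical X-Backend response header on each app server (for example X-Backend: app1); the header and function names are illustrative, not something HAProxy or Nginx sets for you.

```shell
#!/bin/sh
# Count how often each value of one response header appears in a stream of
# header dumps on stdin; prints "count value" lines sorted by value.
# The header name match is case-insensitive.
tally_header() {
  tr -d '\r' | awk -v name="$1" '
    {
      i = index($0, ":")
      if (i > 0 && tolower(substr($0, 1, i - 1)) == tolower(name)) {
        value = substr($0, i + 1)
        sub(/^[ \t]+/, "", value)       # trim space after the colon
        count[value]++
      }
    }
    END { for (v in count) print count[v], v }
  ' | sort -k2
}
```

Usage: for i in $(seq 20); do curl -sI https://example.com/; done | tally_header X-Backend. With round robin and two healthy backends, expect roughly even counts; with sticky sessions enabled, curl without a cookie jar still spreads requests.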

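```shell
# Quick troubleshooting helpers (run the journalctl lines one at a time; -f follows the log)
sudo ss -ltnp | grep -E ':(80|443|8404)\b'
sudo journalctl -u haproxy -f
sudo journalctl -u nginx -f
sudo journalctl -u keepalived -f
curl -I http://127.0.0.1:8404/stats
# Syntax-check whichever proxy you run
sudo nginx -t
sudo haproxy -c -f /etc/haproxy/haproxy.cfg
```

Common Pitfalls (and Fixes)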

- SSL mismatch: The HAProxy PEM must contain the full chain followed by the matching private key; rebuild it and reload HAProxy after every renewal.

- Health checks fail: Backends must expose a 200 OK endpoint (e.g., /health). Protect it internally if public.

- Sticky sessions not working: Confirm cookie insertion and that app honors it; avoid caching layers stripping cookies.

- Proxy headers: Always forward Host and X-Forwarded-* headers to keep app URLs and HTTPS detection correct.

- Firewall blocks: Open ports in both the OS firewall and the cloud security group. VRRP (IP protocol 112) must flow between the LBs.

- VIP binding errors: Set net.ipv4.ip_nonlocal_bind=1 on nodes that run proxies before VIP attaches.
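For the SSL mismatch pitfall, a quick sanity check on the HAProxy PEM helps before reloading. The sketch below checks that the file has the layout produced by the concatenation command earlier in this guide (certificates first, then exactly one private key) and, separately, that the key matches the first certificate by comparing public keys with openssl. Function names are hypothetical, and neither check replaces haproxy -c.

```shell
#!/bin/sh
# Check the block layout of a HAProxy PEM bundle: first block a CERTIFICATE,
# exactly one private key, and the key last (the order the cat command
# produces). Prints problems and returns 1 if any are found.
check_pem_layout() {
  awk '
    /^-----BEGIN / {
      type = $0
      sub(/^-----BEGIN /, "", type)
      sub(/-----$/, "", type)
      n++
      if (n == 1 && type != "CERTIFICATE") {
        print "first block is " type ", expected CERTIFICATE"; bad = 1
      }
      if (type ~ /PRIVATE KEY$/) keys++
      last = type
    }
    END {
      if (keys != 1) { print "expected 1 private key, found " (keys + 0); bad = 1 }
      else if (last !~ /PRIVATE KEY$/) { print "private key is not the last block"; bad = 1 }
      exit bad
    }
  ' "$1"
}

# Succeed if the private key matches the first certificate, by comparing the
# public keys openssl derives from each (run as root: the PEM is mode 600).
pem_key_matches() {
  [ "$(openssl x509 -in "$1" -noout -pubkey)" = "$(openssl pkey -in "$1" -pubout)" ]
}
```

Usage: sudo sh -c '. ./pemcheck.sh && check_pem_layout /etc/haproxy/certs/example.com.pem && pem_key_matches /etc/haproxy/certs/example.com.pem && echo OK', where pemcheck.sh is a hypothetical file holding the two functions.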

Real-World Scenarios

- WordPress at scale: Terminate TLS on HAProxy, cache static assets at Nginx behind it or a CDN, and enable sticky sessions only if your PHP session store isn’t centralized (better: Redis for sessions).

- API microservices: Use HAProxy with path- or host-based routing to multiple backends. Add rate limiting with stick tables, and circuit-breaker-style ejection of failing servers with the observe layer7 and on-error server options.

- Zero-downtime deploys: Add new backend nodes, drain old ones (set weight 0 or disable), then remove them after connections complete.
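Draining can be done at runtime, without a reload, through HAProxy's Runtime API. This sketch assumes an admin socket in the global section, e.g. stats socket /run/haproxy/admin.sock mode 660 level admin (the example haproxy.cfg above does not define one), and that socat is installed; the function names and the HAPROXY_SOCK variable are hypothetical.

```shell
#!/bin/sh
# Build a Runtime API command that sets a server's state; refuses anything
# other than ready, drain, or maint.
haproxy_state_cmd() {
  case $3 in
    ready|drain|maint) printf 'set server %s/%s state %s\n' "$1" "$2" "$3" ;;
    *) echo "state must be ready, drain or maint" >&2; return 2 ;;
  esac
}

# Send the command to the admin socket (path overridable via HAPROXY_SOCK).
haproxy_set_state() {
  local sock=${HAPROXY_SOCK:-/run/haproxy/admin.sock} cmd
  cmd=$(haproxy_state_cmd "$@") || return
  printf '%s\n' "$cmd" | sudo socat stdio "unix-connect:$sock"
}
```

A deploy could then run haproxy_set_state be_app app1 drain, wait until app1 shows no active sessions on the stats page, update the server, and finish with haproxy_set_state be_app app1 ready. Drain stops new traffic while letting existing connections complete.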

YouStable Tip: Infrastructure That Simplifies Load Balancing

If you prefer not to manage every piece yourself, YouStable’s cloud VPS with dedicated vCPUs, generous bandwidth, and optional floating IPs make HAProxy/Nginx clusters straightforward. Our support team can guide best practices for SSL, security, and high availability without vendor lock-in.

Summary: How to Install a Load Balancer on a Linux Server

- Choose tool: HAProxy (advanced L4/L7) or Nginx (L7 reverse proxy).

- Install via apt/dnf and enable service.

- Configure frontend: 80/443 with health checks, headers, and SSL termination.

- Define backends: Server pool with checks and optional sticky sessions.

- Secure: Harden TLS, firewall, and admin endpoints.

- Scale and HA: Add second LB and Keepalived VIP.

- Monitor and test: Metrics, logs, and synthetic checks.

FAQs: Installing a Load Balancer on a Linux Server

Which is better for Linux load balancing: HAProxy or Nginx?

For most production environments, HAProxy offers richer health checks, advanced routing, and detailed metrics. Nginx is excellent if you already use it as a web server and need simple L7 balancing. Both handle high traffic well; choose based on feature needs and team familiarity.

What’s the difference between Layer 4 and Layer 7 load balancing?

Layer 4 (TCP/UDP) forwards connections without inspecting HTTP. It’s fast and efficient (e.g., IPVS, HAProxy in TCP mode). Layer 7 understands HTTP/HTTPS, enabling path-based routing, header manipulation, cookies, and SSL termination. Use L7 for web apps that need smarter routing.

How do I add SSL to my Linux load balancer?

Terminate TLS at the load balancer with Let’s Encrypt or provider-issued certs. In HAProxy, use a PEM containing fullchain and private key. In Nginx, set ssl_certificate and ssl_certificate_key. Enforce strong ciphers, HTTP/2, and HSTS, and automate renewal.
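To automate the HAProxy side of renewal, Certbot can run a deploy hook after each successful renewal. This sketch assumes Certbot's standard hook directory and its RENEWED_LINEAGE variable, plus the /etc/haproxy/certs layout used earlier; the script name haproxy-pem.sh and the function name are hypothetical.

```shell
#!/bin/sh
# Certbot deploy-hook sketch: rebuild the HAProxy PEM after each renewal.
# Assumed location: /etc/letsencrypt/renewal-hooks/deploy/haproxy-pem.sh
# (make it executable). Certbot exports RENEWED_LINEAGE, e.g.
# /etc/letsencrypt/live/example.com, when it runs deploy hooks.

# Write <outdir>/<name>.pem as fullchain.pem followed by privkey.pem (mode 600)
# and print its path. Writing to a temp file and renaming avoids HAProxy
# ever reading a half-written PEM.
build_haproxy_pem() {
  local lineage=$1 outdir=${2:-/etc/haproxy/certs} out
  out="$outdir/$(basename "$lineage").pem"
  mkdir -p "$outdir" || return
  (
    umask 077                        # subshell keeps the umask change local
    cat "$lineage/fullchain.pem" "$lineage/privkey.pem" > "$out.tmp"
  ) || return
  mv "$out.tmp" "$out" || return
  chmod 600 "$out"
  printf '%s\n' "$out"
}

# Only act when invoked by Certbot; validate the config before reloading.
if [ -n "${RENEWED_LINEAGE:-}" ]; then
  build_haproxy_pem "$RENEWED_LINEAGE" &&
    haproxy -c -f /etc/haproxy/haproxy.cfg > /dev/null &&
    systemctl reload haproxy
fi
```

Certbot runs executables in /etc/letsencrypt/renewal-hooks/deploy/ after every successful renewal. To run the hook once by hand: sudo env RENEWED_LINEAGE=/etc/letsencrypt/live/example.com sh /etc/letsencrypt/renewal-hooks/deploy/haproxy-pem.sh. In an HA pair, the rebuilt PEM still has to reach the second node.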

How can I make the load balancer highly available?

Deploy at least two load balancer nodes and float a Virtual IP using Keepalived/VRRP. Point DNS to the VIP. If the primary LB fails, the backup takes over automatically. Ensure both nodes share certificates and configuration via automation.

Do I need sticky sessions for WordPress or PHP apps?

Prefer centralized sessions (Redis/Memcached) so any backend can serve any request. If that’s not possible, enable sticky sessions in HAProxy (cookie) or Nginx (ip_hash) as a stopgap. Long term, externalize sessions and uploads to avoid coupling users to a single node.

How do I monitor a Linux load balancer?

Use HAProxy’s stats page and Prometheus haproxy_exporter, or Nginx logs with nginx-exporter/stub_status. Track response times, errors, connection counts, and backend health. Centralize logs and set alerts on VIP reachability and backend failures.

Can I use the same server for load balancing and web hosting?

You can, but separating roles improves reliability, security, and scaling. For small sites, a single Nginx can serve static content and proxy to app servers. For larger stacks, dedicate the load balancer and scale backends independently, ideally on cloud VPS like YouStable.

Next Steps

- Start with HAProxy on a Linux VPS and configure health checks.

- Add TLS termination and hardened headers.

- Deploy a secondary LB and Keepalived for HA.

- Automate configuration and certificate sync with Ansible.

- Monitor with Prometheus/Grafana and test failover regularly.

With the steps above, you can confidently install a load balancer on a Linux server, scale your applications, and maintain high uptime. If you want a faster start, YouStable’s VPS platform and expert guidance can help you deploy a resilient load-balanced architecture in hours, not days.