To install HAProxy on a Linux server, update your OS packages, install the haproxy package from your distro’s repository, enable and start the service, then configure /etc/haproxy/haproxy.cfg with frontend and backend sections. Verify the configuration with haproxy -c -f /etc/haproxy/haproxy.cfg, open firewall ports 80/443, and reload HAProxy for changes to take effect.

In this guide, you’ll learn how to install HAProxy on a Linux server step by step, configure it as a high‑performance load balancer and reverse proxy, enable SSL/TLS, tune performance, and troubleshoot common issues. I’ll keep it beginner‑friendly while adding real‑world tips from 12+ years of hosting and infrastructure experience.

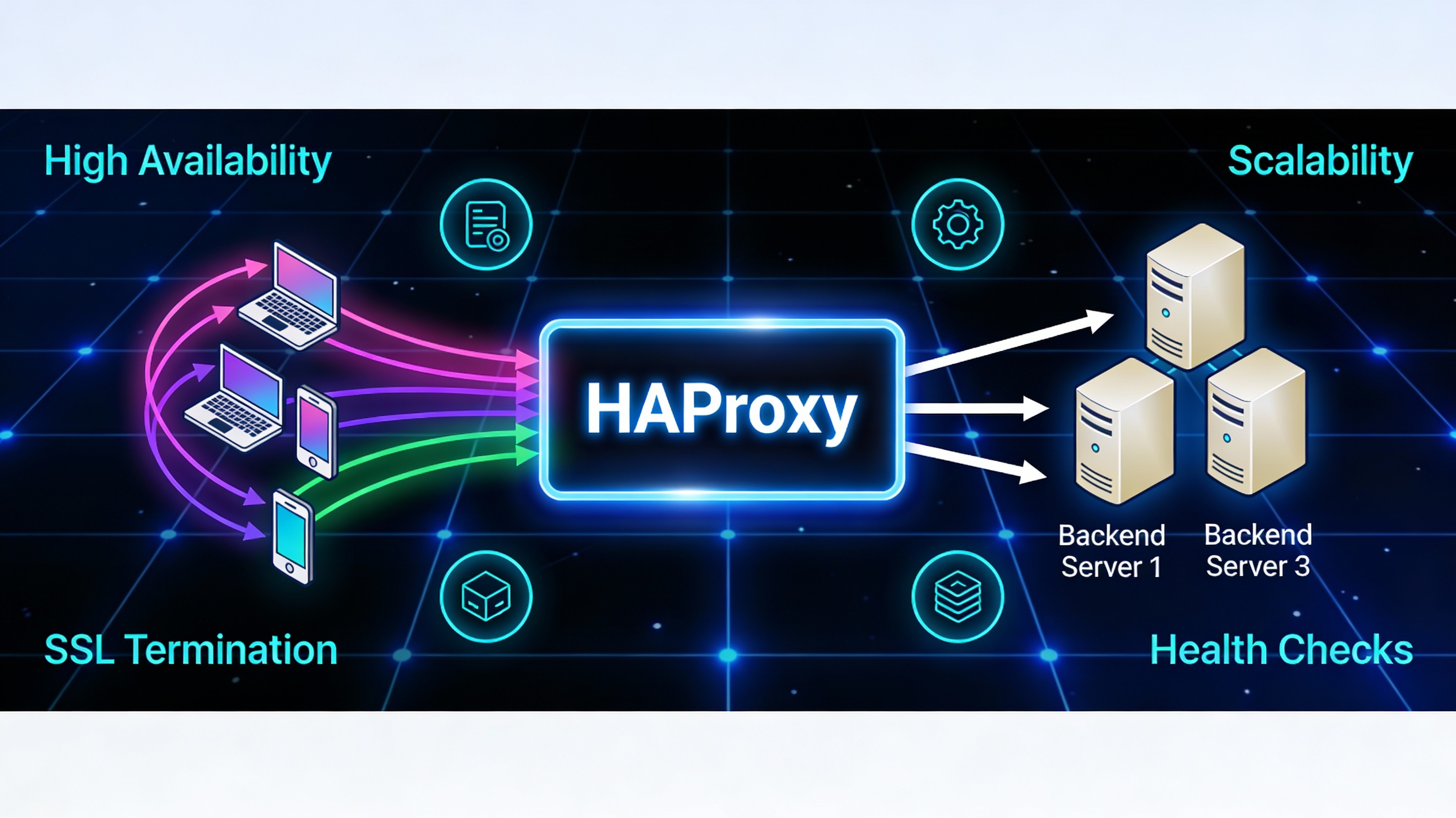

What Is HAProxy and Why Use It?

HAProxy (High Availability Proxy) is a fast, reliable TCP/HTTP load balancer and reverse proxy. It distributes client traffic across multiple backend servers, improving availability, scalability, and security. It’s widely used by high‑traffic websites due to its efficiency, flexible ACLs, health checks, SSL termination, and observability.

Who Is This Guide For?

This guide is for developers, sysadmins, and website owners who want to deploy a load balancer on Ubuntu/Debian or RHEL/CentOS/Rocky/AlmaLinux. We’ll cover installation, configuration, SSL, logging, and best practices with examples you can copy and paste.

Prerequisites

- Linux server (Ubuntu 22.04/24.04, Debian 12, Rocky/AlmaLinux 8/9, or CentOS 7/Stream)

- Root or sudo access

- Domain name (for SSL section)

- Firewall access to open ports 80 and 443

- Two or more backend web servers for load balancing (optional for basic reverse proxy)

Quick Steps Overview

- Install HAProxy using your package manager

- Enable and start the service

- Edit /etc/haproxy/haproxy.cfg

- Validate configuration and restart

- Open firewall ports (80/443)

- Optionally enable SSL termination and health checks
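The first step above differs by distribution family. As a rough sketch, the mapping can be expressed as a small shell function. The function name haproxy_install_cmd is hypothetical, and the ID values are assumed to match the ID field in /etc/os-release on the distributions listed in the prerequisites.

```shell
#!/bin/sh
# Hypothetical helper: map an os-release ID to the install command used in this guide.
# It only prints the command; it does not run it.
haproxy_install_cmd() {
  case "$1" in
    ubuntu|debian)        echo "sudo apt install -y haproxy" ;;
    rhel|rocky|almalinux) echo "sudo dnf install -y haproxy" ;;
    centos)               echo "sudo yum install -y haproxy" ;;
    *) echo "unsupported distro: $1" >&2; return 1 ;;
  esac
}
```

To try it on the current host, source /etc/os-release in a subshell and pass the ID, for example: ( . /etc/os-release; haproxy_install_cmd "$ID" ).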

Install HAProxy on Ubuntu/Debian

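Ubuntu and Debian ship HAProxy in their default repositories. The packaged version is a stable release that can lag behind the newest upstream branch, so check it with haproxy -v after installing. Use the following commands: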

sudo apt update

sudo apt install -y haproxy

haproxy -v

# Enable and start on boot

sudo systemctl enable --now haproxy

# Check status and logs

systemctl status haproxy

journalctl -u haproxy -f

Install HAProxy on RHEL/CentOS/Rocky/AlmaLinux

On RHEL-compatible systems, HAProxy is available via the AppStream/BaseOS repositories. For older systems, EPEL may be required.

# Rocky/AlmaLinux/RHEL 8/9

sudo dnf install -y haproxy

haproxy -v

# CentOS 7

sudo yum install -y haproxy

# Enable and start

sudo systemctl enable --now haproxy

systemctl status haproxy

journalctl -u haproxy -f

Understand the HAProxy Configuration Layout

HAProxy reads from /etc/haproxy/haproxy.cfg. Config sections include:

- global: process-wide settings (logging, threads, SSL defaults)

- defaults: default parameters for frontends/backends (timeouts, mode)

- frontend: where clients connect (bind address/port, ACLs, rules)

- backend: pool of servers to route requests to

- listen: combined frontend+backend (useful for TCP or simple setups)
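When a configuration grows, it helps to see which of these sections a file actually defines. A minimal sketch, assuming section keywords start in column 0 as they do in this guide’s examples; the function name list_sections is hypothetical.

```shell
#!/bin/sh
# Print "line:section header" for every section keyword that starts a line.
# Indented directives such as "log global" or "default_backend" are not matched.
list_sections() {
  grep -nE '^(global|defaults|frontend|backend|listen)([[:space:]]|$)' "$1"
}
```

For example, list_sections /etc/haproxy/haproxy.cfg prints one line per section with its line number, which makes it quick to jump to the right place in an editor.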

Basic HTTP Load Balancing Configuration (Example)

Below is a minimal yet production-friendly configuration for HTTP traffic on port 80, balancing to two backend web servers. It includes useful timeouts and a stats page.

global

log /dev/log local0

log /dev/log local1 notice

maxconn 50000

user haproxy

group haproxy

daemon

# Use threads for modern multi-core servers

nbthread 2

# Stronger defaults for TLS later

tune.ssl.default-dh-param 2048

defaults

log global

mode http

option httplog

option dontlognull

timeout connect 5s

timeout client 30s

timeout server 30s

timeout http-request 10s

retries 3

frontend fe_http

bind *:80

# Redirect HTTP to HTTPS if SSL is configured; keep commented for now

# http-request redirect scheme https code 301 unless { ssl_fc }

default_backend be_app

backend be_app

balance roundrobin

option httpchk GET /health

http-check expect rstring OK

server app1 10.0.0.11:80 check

server app2 10.0.0.12:80 check

listen stats

bind :8404

mode http

stats enable

stats uri /stats

stats refresh 10s

# Protect with simple auth (adjust credentials)

stats auth admin:StrongPasswordHere

Validate the configuration before restarting:

sudo haproxy -c -f /etc/haproxy/haproxy.cfg

sudo systemctl restart haproxy

Add SSL/TLS Termination (HTTPS) with Let’s Encrypt

HAProxy terminates TLS efficiently. The simplest approach is to obtain a certificate via Let’s Encrypt, convert it to a PEM bundle, and reference it in a TLS-enabled frontend.

Step 1: Obtain a Certificate

If port 80 is free (or you can stop HAProxy briefly), use the standalone method. Replace example.com with your domain.

sudo systemctl stop haproxy

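# certbot is in the default repos on Debian/Ubuntu; on RHEL-family systems it is packaged in EPEL, so enable EPEL first

sudo apt install -y certbot || sudo dnf install -y certbot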

sudo certbot certonly --standalone -d example.com -d www.example.com

# Certificates land under /etc/letsencrypt/live/example.com/

Step 2: Create a PEM Bundle for HAProxy

sudo mkdir -p /etc/haproxy/certs

sudo cat /etc/letsencrypt/live/example.com/fullchain.pem \
  /etc/letsencrypt/live/example.com/privkey.pem | sudo tee /etc/haproxy/certs/example.com.pem > /dev/null

sudo chmod 600 /etc/haproxy/certs/example.com.pem

Step 3: Add an HTTPS Frontend

frontend fe_https

bind *:443 ssl crt /etc/haproxy/certs/example.com.pem alpn h2,http/1.1

mode http

option httplog

default_backend be_app

# Optional: redirect HTTP to HTTPS. This replaces the earlier fe_http section; do not define fe_http twice.

frontend fe_http

bind *:80

http-request redirect scheme https code 301 unless { ssl_fc }

default_backend be_app

Validate, restart (HAProxy was stopped in Step 1), and test:

sudo haproxy -c -f /etc/haproxy/haproxy.cfg

sudo systemctl restart haproxy

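curl -I https://example.com

Automate renewal by adding a deploy hook that rebuilds the PEM and reloads HAProxy. Because the certificate was issued with --standalone, renewal also needs port 80 to be free while certbot runs: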

sudo bash -c 'cat > /etc/letsencrypt/renewal-hooks/deploy/haproxy.sh' << "EOF"

#!/usr/bin/env bash

DOMAIN="example.com"

cat /etc/letsencrypt/live/$DOMAIN/fullchain.pem /etc/letsencrypt/live/$DOMAIN/privkey.pem > /etc/haproxy/certs/$DOMAIN.pem

chmod 600 /etc/haproxy/certs/$DOMAIN.pem

systemctl reload haproxy

EOF

sudo chmod +x /etc/letsencrypt/renewal-hooks/deploy/haproxy.sh

Add Health Checks, Algorithms, and Sticky Sessions

Load Balancing Algorithms

- roundrobin: default, rotates evenly

- leastconn: directs to server with fewest connections (great for slow requests)

- source: hash based on client IP (primitive stickiness)
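To make the leastconn rule concrete, here is an illustration of the selection step only. It is not HAProxy’s implementation and it ignores server weights and queueing; the function name pick_leastconn and the input format are assumptions made for this sketch.

```shell
#!/bin/sh
# Illustration only: pick the server with the fewest active connections.
# Input on stdin: one "<server> <active_connections>" pair per line.
pick_leastconn() {
  sort -k2,2n | head -n 1 | cut -d' ' -f1
}

# Example: app2 has the fewest connections, so it receives the next request.
printf '%s\n' 'app1 5' 'app2 2' 'app3 9' | pick_leastconn
```

With roundrobin the next request would go to whichever server is next in rotation regardless of these counts, which is why leastconn tends to suit backends with slow or uneven requests.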

Health Checks

Use option httpchk to probe a health endpoint and mark servers up/down automatically.

backend be_app

balance leastconn

option httpchk GET /health

http-check expect status 200

server app1 10.0.0.11:80 check

server app2 10.0.0.12:80 check

Sticky Sessions (Session Persistence)

When apps store session state in memory, enable sticky sessions via cookies so each client keeps returning to the same server:

backend be_app

balance roundrobin

cookie SRV insert indirect nocache

server app1 10.0.0.11:80 check cookie s1

server app2 10.0.0.12:80 check cookie s2

Enable Logging and Observability

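HAProxy logs to syslog. On Ubuntu/Debian, rsyslog is configured by default. On RHEL-based systems, add a rule for the local0 and local1 facilities if logs don’t appear. The UDP listener in the snippet below is only needed when the global section logs to 127.0.0.1 instead of /dev/log: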

sudo bash -c 'cat > /etc/rsyslog.d/49-haproxy.conf' << "EOF"

$ModLoad imudp

$UDPServerRun 514

local0.* /var/log/haproxy.log

local1.notice /var/log/haproxy-admin.log

EOF

sudo systemctl restart rsyslog

sudo systemctl reload haproxy

For metrics, expose the HAProxy stats page or use a Prometheus exporter that scrapes the stats socket.
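The stats page can also be read as CSV by appending ;csv to the stats URI, which is handy for quick scripts. A minimal sketch, assuming the stats listener from this guide on port 8404; the function name server_status is hypothetical, and the column positions are looked up from the CSV header rather than hard-coded.

```shell
#!/bin/sh
# Read HAProxy stats CSV on stdin and print "<proxy>/<server> <status>"
# for each server row, skipping the FRONTEND and BACKEND summary rows.
server_status() {
  awk -F, '
    NR == 1 {
      sub(/^# /, "", $1)                      # header line starts with "# "
      for (i = 1; i <= NF; i++) col[$i] = i   # map column name to position
      next
    }
    $(col["svname"]) != "FRONTEND" && $(col["svname"]) != "BACKEND" {
      print $(col["pxname"]) "/" $(col["svname"]), $(col["status"])
    }
  '
}
```

Usage, with the credentials from the stats auth line: curl -s -u admin:StrongPasswordHere 'http://127.0.0.1:8404/stats;csv' | server_status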

Open Firewall Ports and Configure SELinux

Allow HTTP/HTTPS traffic:

# UFW (Ubuntu)

sudo ufw allow 80/tcp

sudo ufw allow 443/tcp

sudo ufw reload

# firewalld (RHEL family)

sudo firewall-cmd --permanent --add-service=http

sudo firewall-cmd --permanent --add-service=https

sudo firewall-cmd --reload

If SELinux is enforcing and HAProxy must connect to any port, set this boolean:

sudo setsebool -P haproxy_connect_any=1

Performance Tuning Essentials

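- Threads: Set nbthread to match CPU cores (start with 2–4). Recent HAProxy releases default to one thread per available CPU, so an explicit value is mainly useful for capping usage.

- Connections: Increase maxconn in global, and set it per frontend or per server where you need finer limits.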

- Kernel queues: Raise net.core.somaxconn and fs.file-max if you hit limits.

- Timeouts: Tune timeouts (connect/client/server) based on your app’s behavior.

- SSL: Use alpn h2,http/1.1 and tune.ssl.default-dh-param 2048 or 3072 for strong security.

# Example sysctl tuning

sudo bash -c 'cat >> /etc/sysctl.d/99-haproxy.conf' << "EOF"

net.core.somaxconn = 65535

net.ipv4.ip_local_port_range = 10240 65535

fs.file-max = 2097152

EOF

sudo sysctl --system

Troubleshooting Common HAProxy Issues

- Cannot bind socket [0.0.0.0:80/443]: Another service (like Nginx or Apache) is using the port. Stop/disable it or change HAProxy’s bind.

- 503 Service Unavailable: Health checks failing or no available backends. Verify /health endpoint, firewall rules, and backend IPs.

- SSL handshake errors: Wrong certificate path or permissions. Rebuild PEM and ensure chmod 600.

- No logs: Ensure rsyslog is running and /etc/rsyslog.d/49-haproxy.conf is loaded.

- High latency: Switch to leastconn, enable keep-alive, and review backend app performance.
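For the "Cannot bind socket" case in the list above, the quickest check is to see what already listens on the port. A minimal sketch that filters ss -ltn style output, where the fourth column is the local address and port; the function name listeners_on_port is hypothetical.

```shell
#!/bin/sh
# Read `ss -ltn` (or `ss -ltnp`) output on stdin and print the rows whose
# local address ends in the given TCP port.
listeners_on_port() {
  awk -v port="$1" '$1 == "LISTEN" && $4 ~ (":" port "$") { print }'
}
```

Usage: sudo ss -ltnp | listeners_on_port 80. With -p and root privileges, each matching row also names the process holding the port, which tells you whether to stop Nginx, Apache, or a stray HAProxy instance.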

Real-World Use Cases

- Scale WordPress or PHP apps horizontally with two or more backend servers.

- Act as a secure reverse proxy in front of microservices, Node.js, or Python apps.

- Terminate SSL centrally and forward HTTP to internal services.

- Blue/green deployments with ACLs and map files to control traffic shifts.

- API gateways with rate limiting (via stick-tables) and fine-grained ACLs.

When to Choose HAProxy vs. Nginx

- Choose HAProxy for: high connection rates, advanced L4/L7 load balancing, detailed health checks, stick-tables, and large-scale traffic.

- Choose Nginx for: combined web server + proxy with static file serving and simpler configs.

Soft Recommendation: Managed HAProxy with YouStable

If you prefer a done-for-you setup, YouStable can provision optimized VPS or cloud instances with HAProxy pre-installed, SSL configured, and monitoring enabled. Our engineers help you choose the right balancing strategy and ensure uptime and speed for your workloads.

Complete Starter Configuration You Can Adapt

Use this as a base and adjust backend IPs, domain names, and timeouts to match your environment.

global

log /dev/log local0

log /dev/log local1 notice

maxconn 100000

user haproxy

group haproxy

daemon

nbthread 4

tune.ssl.default-dh-param 2048

defaults

log global

mode http

option httplog

option forwardfor

option http-server-close

timeout connect 5s

timeout client 60s

timeout server 60s

timeout http-request 10s

retries 3

frontend fe_http

bind *:80

http-request redirect scheme https code 301 unless { ssl_fc }

default_backend be_app

frontend fe_https

bind *:443 ssl crt /etc/haproxy/certs/example.com.pem alpn h2,http/1.1

http-response set-header Strict-Transport-Security "max-age=31536000; includeSubDomains; preload"

default_backend be_app

backend be_app

balance leastconn

option httpchk GET /health

http-check expect status 200

cookie SRV insert indirect nocache

server app1 10.0.0.11:80 check cookie s1

server app2 10.0.0.12:80 check cookie s2

listen stats

bind :8404

mode http

stats enable

stats uri /stats

stats refresh 10s

stats auth admin:StrongPasswordHere

FAQs: Install HAProxy on Linux Server

How do I install HAProxy on Ubuntu or Debian?

Run sudo apt update && sudo apt install -y haproxy, then enable and start it with sudo systemctl enable --now haproxy. Edit /etc/haproxy/haproxy.cfg, validate with haproxy -c -f /etc/haproxy/haproxy.cfg, and restart the service.
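The validate-then-apply step is easy to wrap so that a broken configuration never reaches the running service. A minimal sketch: the function name safe_reload and the overridable HAPROXY_BIN and RELOAD_CMD variables are assumptions for illustration, and it uses reload rather than restart so existing connections are kept.

```shell
#!/bin/sh
# Both variables can be overridden, e.g. for a dry run.
: "${HAPROXY_BIN:=haproxy}"
: "${RELOAD_CMD:=sudo systemctl reload haproxy}"

# Validate the config first; reload only if the check passes.
safe_reload() {
  cfg="${1:-/etc/haproxy/haproxy.cfg}"
  if "$HAPROXY_BIN" -c -f "$cfg"; then
    $RELOAD_CMD
  else
    echo "config check failed for $cfg; not reloading" >&2
    return 1
  fi
}
```

Call safe_reload with no arguments after each edit. If the service is stopped, reload will fail, so start it with systemctl start haproxy first.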

How do I configure HAProxy as a reverse proxy?

Create a frontend to bind on 80/443 and a backend that points to your app servers. Set default_backend in the frontend and use balance, server, and health checks in the backend. Optionally add SSL termination and redirects.

What is the best load-balancing algorithm for web apps?

Start with leastconn for dynamic, variable traffic and slow endpoints; it tends to lower queue times. Use roundrobin for uniform workloads and source for simple IP-based stickiness if your app requires session affinity.

How do I enable SSL in HAProxy?

Obtain a certificate (e.g., via Let’s Encrypt), concatenate fullchain.pem and privkey.pem into a single PEM file, then reference it in a frontend bind line: bind *:443 ssl crt /etc/haproxy/certs/domain.pem alpn h2,http/1.1.
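The concatenation step can be sketched as a small function that never leaves the bundle world-readable and swaps the new file in with a single rename. The function name build_pem is hypothetical; the argument order follows this guide (fullchain.pem first, then privkey.pem).

```shell
#!/bin/sh
# build_pem <fullchain.pem> <privkey.pem> <output.pem>
build_pem() {
  (
    umask 077                                  # files created below get mode 600
    rm -f "$3.tmp"
    cat "$1" "$2" > "$3.tmp" && mv "$3.tmp" "$3"
  )
}
```

Run it as root, since privkey.pem is readable only by root, for example: build_pem /etc/letsencrypt/live/example.com/fullchain.pem /etc/letsencrypt/live/example.com/privkey.pem /etc/haproxy/certs/example.com.pem. Writing to a temporary file first means HAProxy never sees a half-written bundle if it reloads at the same moment.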

Why am I getting 503 errors from HAProxy?

503 indicates no healthy backends are available. Check backend IPs/ports, ensure the health check path returns 200, confirm firewall rules, and inspect logs via journalctl -u haproxy or /var/log/haproxy.log.

Can HAProxy handle HTTP/2 and HTTP/3?

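HAProxy supports HTTP/2 over TLS with ALPN (alpn h2,http/1.1). Native HTTP/3 over QUIC arrived in HAProxy 2.6, but it requires a build linked against a QUIC-capable TLS library, which many distribution packages lack. Until your distribution’s build includes it, you can place a QUIC-capable proxy or CDN in front of HAProxy.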

Is HAProxy better than Nginx for load balancing?

For pure load balancing at scale, HAProxy often has the edge thanks to its advanced health checks, stick-tables, and performance under high concurrency. Nginx is excellent too, especially when you also need a static web server. Choose based on features and ecosystem fit.

Conclusion

You’ve learned how to install HAProxy on a Linux server, configure frontends/backends, enable SSL, tune performance, and troubleshoot errors. Start simple, validate configurations often, and iterate based on real traffic. If you want a streamlined, managed setup, YouStable can deploy and maintain HAProxy for you on optimized infrastructure.