Understanding Docker on a Linux server is a valuable step for anyone aiming to modernize how applications are built, shipped, and run. Docker packages applications into containers, which simplifies both development and operations and helps your apps behave the same way regardless of the underlying environment.

This approachable guide explains what Docker is, how it works, why it matters, and how it changes the way you manage applications on Linux.

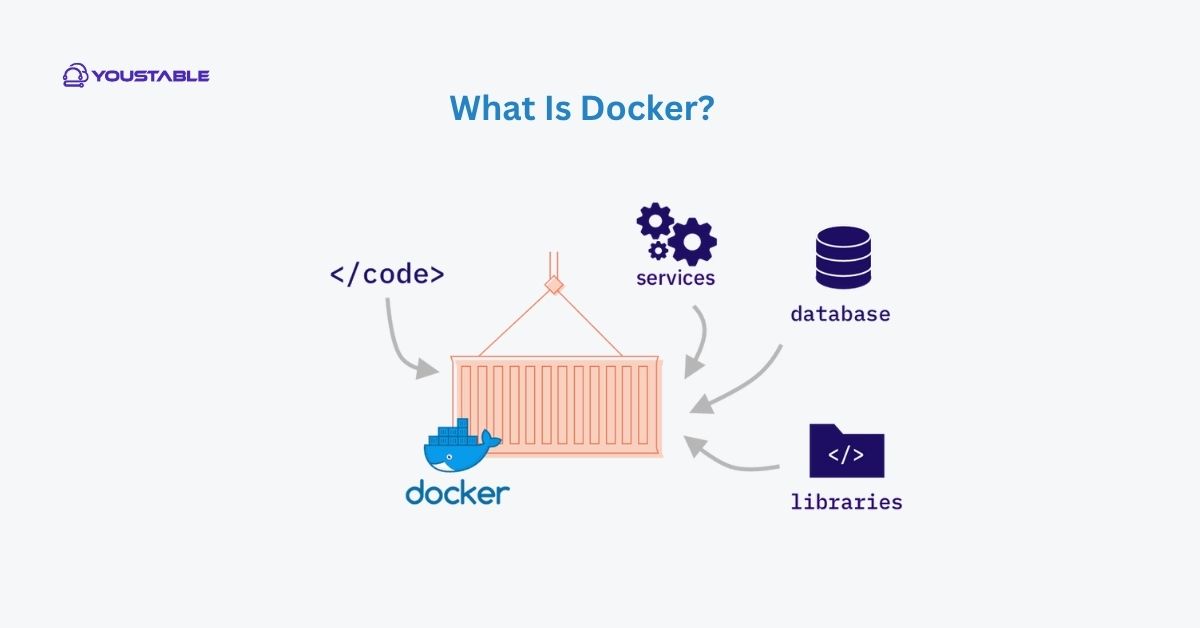

What Is Docker?

Docker is an open-source platform for developing, shipping, and running applications inside containers. At its core, Docker lets you package software together with its dependencies and system tools into an image, which then runs as a container. Containers are:

- Lightweight: Share the host system’s kernel but run independently.

- Portable: Run on any system with Docker installed, whether Linux, Windows, or a cloud platform. (On Windows and macOS, Linux containers run inside a lightweight Linux virtual machine.)

- Isolated: Each container operates in its own isolated environment, minimizing conflicts.

- Consistent: Containers started from the same image behave the same in development, testing, and production.

This design ensures that developers and system administrators can move and scale workloads reliably, without the “it works on my machine” issues.

What Are Containers in Docker?

A container is a standard, runnable software unit that includes the application code, its libraries, and dependencies, all wrapped into one package. Here’s what sets containers apart:

- Isolation: Each container is sandboxed at the OS level: namespaces restrict what a container can see, and cgroups limit the resources it can use.

- Efficiency: Multiple containers can run on a single Linux host, sharing system resources.

- Immutability: Once a container image is created, it doesn’t change; this makes deployments predictable.

- Lifecycle: Containers are built from images, run as isolated processes, and can be easily started, stopped, or removed.
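
The lifecycle in the last bullet maps onto a handful of CLI commands. The following is a minimal sketch, assuming Docker is installed and using the public nginx image as a stand-in. The container name demo-web and the DOCKER override variable are illustrative choices, not Docker conventions.

```shell
#!/usr/bin/env bash
# Sketch of a container lifecycle. Set DOCKER=echo to preview the
# commands without running them (an illustrative convenience).
DOCKER="${DOCKER:-docker}"

container_lifecycle() {
  local name="${1:-demo-web}"               # hypothetical container name
  "$DOCKER" pull nginx                      # fetch the image from Docker Hub
  "$DOCKER" create --name "$name" nginx     # create a container from the image
  "$DOCKER" start "$name"                   # start it as an isolated process
  "$DOCKER" ps --filter "name=$name"        # list it while it is running
  "$DOCKER" stop "$name"                    # stop the process; the container still exists
  "$DOCKER" rm "$name"                      # remove the stopped container
}
```

Calling container_lifecycle walks one container through every stage. The image itself stays on disk after the container is removed, so the next container starts without another download.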

Containers are often compared to lightweight virtual machines, but they are really isolated processes that share the host’s kernel, which is why they use fewer resources and start much faster.

Key Docker Concepts Explained

Understanding Docker involves getting comfortable with several core terms:

| Term | Description |
|---|---|
| Image | A read-only template containing the app’s code, libraries, and settings. Containers are created from images. |
| Container | A running instance of an image: the application code executing in isolation. |
| Dockerfile | A text file of instructions that Docker follows to build an image. |
| Volume | Storage managed by Docker that persists data beyond the lifecycle of a container. |
| Registry | A service that stores and distributes images (such as Docker Hub). |
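
To see how a Dockerfile, an image, and a container relate, here is a small sketch that writes a two-line Dockerfile, builds an image from it, and runs a container from that image. The directory demo-app, the tag demo-app:1.0, and the DOCKER override variable are hypothetical names chosen for illustration. The base image alpine:3.20 is assumed to be pullable from Docker Hub.

```shell
#!/usr/bin/env bash
# Set DOCKER=echo to preview the commands without running them.
DOCKER="${DOCKER:-docker}"

# Write a minimal Dockerfile into a build directory.
write_dockerfile() {
  local dir="${1:-demo-app}"
  mkdir -p "$dir"
  cat > "$dir/Dockerfile" <<'EOF'
# Base image: a small Linux userland
FROM alpine:3.20
# Default process started when a container runs
CMD ["echo", "hello from a container"]
EOF
}

# Build an image from the Dockerfile, then run a container from it.
build_and_run() {
  local dir="${1:-demo-app}" tag="${2:-demo-app:1.0}"
  "$DOCKER" build -t "$tag" "$dir"   # Dockerfile -> image
  "$DOCKER" run --rm "$tag"          # image -> container (removed on exit)
}
```

Running write_dockerfile followed by build_and_run produces the image once. Every later docker run of the same tag starts a new container from that unchanged image.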

How Docker Works on Linux

On Linux, Docker Engine runs as a background daemon that uses Linux kernel features such as namespaces and cgroups to provide process isolation and networking. Here’s how it fits together:

- Build an Image: Use a Dockerfile to assemble your application’s environment—operating system libraries, language runtimes, configs, and your code.

- Run a Container: Launch an isolated process with docker run, which starts a fresh, sandboxed environment from an existing image.

Example:

    docker run hello-world

This command downloads a tiny “hello-world” image from Docker Hub and runs it, confirming that Docker works.

- Networking and Port Mapping: By default, a container’s ports are not reachable from outside the host. You must publish them explicitly with the -p flag, which maps a host port to a container port (allowing, for example, external web access).

Example:

    docker run -d -p 80:80 nginx

This runs an NGINX web server in the background and makes it accessible on your host’s port 80.

- Data Persistence: Store files outside the container’s writable layer using volumes or bind mounts.

    docker run -v /host/data:/container/data myimage

This bind-mounts /host/data from the Linux server into the container (myimage is a placeholder image name), so the data persists even if you delete and recreate the container.
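
Port mapping and bind mounts are often combined. As a rough sketch, the function below serves a host directory through NGINX, assuming the official nginx image (which reads static files from /usr/share/nginx/html). The container name static-site, the default port 8080, and the DOCKER override variable are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Set DOCKER=echo to preview the commands without running them.
DOCKER="${DOCKER:-docker}"

serve_directory() {
  local site_dir="$1"            # absolute path on the host
  local host_port="${2:-8080}"   # host port to publish
  # -d: run in the background; -p: host port -> container port 80;
  # -v ...:ro: bind-mount the host directory read-only.
  "$DOCKER" run -d --name static-site \
    -p "$host_port:80" \
    -v "$site_dir:/usr/share/nginx/html:ro" \
    nginx
  # Show the resulting port mappings for the container.
  "$DOCKER" port static-site
}
```

For example, serve_directory /srv/site 8080 would make the files in /srv/site available on the host’s port 8080. The path /srv/site is only a placeholder.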

Why Docker Matters for Linux Servers

Docker has changed how applications are deployed and managed on Linux servers. Containerization offers a lightweight, consistent, and scalable way to run development, testing, and production environments. The main benefits for Linux-based infrastructure are:

- Rapid Deployment: Launch new application environments in seconds once the image is available locally, rather than minutes or hours.

- Scalability: Easily spin up more containers to scale apps horizontally.

- Simplified CI/CD: Integrates smoothly with DevOps workflows—build an image once and deploy it anywhere.

- Versioning: Tag an image for each release, run different application versions side by side, and roll back by redeploying an earlier tag.

- Environment Consistency: No more “works on my machine” problems; containers ensure uniform behavior.
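
Image tags are what make the build-once and roll-back workflow practical. The sketch below is one possible shape for it, assuming a Dockerfile in the current directory. The image and container name myapp, the version numbers, and the DOCKER override variable are hypothetical.

```shell
#!/usr/bin/env bash
# Set DOCKER=echo to preview the commands without running them.
DOCKER="${DOCKER:-docker}"

# Build an image and tag it with a version.
release() {
  local version="$1" context="${2:-.}"
  "$DOCKER" build -t "myapp:$version" "$context"
}

# Replace the running container with one started from the given tag.
deploy() {
  local version="$1"
  # Remove the previous container if one exists; ignore the error if not.
  "$DOCKER" rm -f myapp 2>/dev/null || true
  "$DOCKER" run -d --name myapp "myapp:$version"
}
```

A typical sequence would be release 1.0, deploy 1.0, then release 1.1 and deploy 1.1. If version 1.1 misbehaves, deploy 1.0 rolls back, because the older image is still stored under its own tag.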

Practical Workflows with Docker

Once you understand Docker’s philosophy, workflows become predictable:

- Develop Locally: Package your app in a container that closely mirrors production.

- Test in Isolation: Run containers for different services (web, app, database) without conflicts.

- Deploy Anywhere: Use the same image locally, on your test server, or in the cloud.
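
Testing several services in isolation usually means placing them on a shared user-defined network, where containers can reach each other by name. Here is a minimal sketch, assuming the official nginx and postgres:16 images. The names demo-net, demo-db, and demo-web, the example password, and the DOCKER override variable are placeholders for illustration only.

```shell
#!/usr/bin/env bash
# Set DOCKER=echo to preview the commands without running them.
DOCKER="${DOCKER:-docker}"

start_stack() {
  # A user-defined network lets containers resolve each other by name.
  "$DOCKER" network create demo-net
  # Database: not published to the host, reachable only on demo-net.
  "$DOCKER" run -d --name demo-db --network demo-net \
    -e POSTGRES_PASSWORD=example postgres:16
  # Web server: published on host port 8080.
  "$DOCKER" run -d --name demo-web --network demo-net -p 8080:80 nginx
}

stop_stack() {
  "$DOCKER" rm -f demo-web demo-db   # stop and remove both containers
  "$DOCKER" network rm demo-net      # remove the now-empty network
}
```

Inside demo-net, the web container can reach the database at the hostname demo-db, while nothing outside the host can connect to the database directly.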

Frequently Asked Questions

How is Docker different from a virtual machine (VM) on Linux?

Docker containers share the Linux kernel with the host and other containers, making them lightweight and fast to start. A VM runs a complete guest operating system, with its own kernel, on virtualized hardware, which means higher resource consumption and slower boot times. With Docker, multiple applications run in isolation on the same OS kernel, which reduces overhead.
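
One way to observe the shared kernel is to compare the kernel release reported by the host with the one reported inside a container. This is a small sketch, assuming the public alpine image. The function name compare_kernels and the DOCKER override variable are illustrative.

```shell
#!/usr/bin/env bash
# Set DOCKER=echo to preview the container command without running it.
DOCKER="${DOCKER:-docker}"

compare_kernels() {
  # Kernel release as seen by the host.
  echo "host:      $(uname -r)"
  # Kernel release as seen by a process inside a container.
  echo "container: $("$DOCKER" run --rm alpine uname -r)"
}
```

On a native Linux host both lines should show the same release, because the container has no kernel of its own. Where Docker itself runs inside a virtual machine (as with Docker Desktop), the container reports that VM’s kernel instead.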

Is Docker only useful for web applications, or can I run other workloads?

Docker is not limited to web applications. While widely used for web apps, you can containerize databases, batch jobs, command-line utilities, background workers, and even graphical applications. Any process that runs on Linux can generally be placed in a container, given the correct image and dependencies.

How do I ensure data in my Docker containers is safe and not lost?

By default, data created inside a container disappears when the container is removed. To persist data—such as databases or uploaded files—use Docker volumes or bind mounts, which store files on the host system. Always configure volumes for essential data so it isn’t lost during rebuilds and upgrades.
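
The following sketch shows a named volume outliving the containers that use it, assuming the public alpine image. The volume name demo-data, the file path /data/note.txt, and the DOCKER override variable are hypothetical.

```shell
#!/usr/bin/env bash
# Set DOCKER=echo to preview the commands without running them.
DOCKER="${DOCKER:-docker}"

volume_demo() {
  # Create a named volume managed by Docker.
  "$DOCKER" volume create demo-data
  # First container writes a file into the volume, then is removed (--rm).
  "$DOCKER" run --rm -v demo-data:/data alpine \
    sh -c 'echo saved > /data/note.txt'
  # A second, brand-new container mounts the same volume and reads the file.
  "$DOCKER" run --rm -v demo-data:/data alpine cat /data/note.txt
}
```

The --rm flag removes each container on exit but leaves named volumes in place, so the second container can still read what the first one wrote. The volume remains until it is deleted explicitly with docker volume rm demo-data.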

Conclusion

Understanding Docker on a Linux server opens the door to flexible, efficient, and reliable application deployment. With Docker’s containers, you gain speed, portability, and isolation, which streamlines how you build and run software on Linux. Explore Docker’s potential to simplify operations and modernize your infrastructure. For in-depth technical documentation, visit the official Docker documentation.